Introduction

We migrate code from the mainframe (MF) to Azure.

The tool we use produces plain, good, functionally equivalent C# code.

But it turns out that's not enough!

So, what's the problem?

The converted code is very slow, especially for batch processing: where the MF completes a job in, say, 30 minutes, the converted code finishes in 8 hours.

At this point someone usually appears and whispers in your ear:

Look, those old technologies are proven by time. It's worth sticking to good old Cobol, or better yet Assembler, if you want to do the real thing.

We're curious though: why is there a difference?

It turns out the issue lies in the difference in network topology between the MF and Azure solutions.

On the MF all programs, the database and file storage sit in virtually a single box, so network latency is negligible.

It's rather usual to see chatty SQL programs on the MF that issue a lot of small SQL queries.

In Azure, programs, database and file storage are different services, most certainly sitting in different physical boxes.

You should be thankful if they are co-located in a single datacenter.

So, network latency immediately becomes a factor.

Even if it adds just 1 millisecond per SQL round trip, it adds up in loops and turns into a showstopper.

There is no easy workaround at the hardware level.

People advise writing programs differently: "Tune applications and databases for performance in Azure SQL Database".

That's good advice for new development but discouraging for a migration done by a tool.

So, what is the way forward?

Well, there is one. While accepting Azure's weak sides, we can exploit its strong sides.

Parallel refactoring

Before continuing, let's consider code that demonstrates the problem:

public void CreateReport(StringWriter writer)

{

var index = 0;

foreach(var transaction in dataService.

GetTransactions().

OrderBy(item => (item.At, item.SourceAccountId)))

{

var sourceAccount = dataService.GetAccount(transaction.SourceAccountId);

var targetAccount = transaction.TargetAccountId != null ?

dataService.GetAccount(transaction.TargetAccountId) : null;

++index;

if (index % 100 == 0)

{

Console.WriteLine(index);

}

writer.WriteLine($"{index},{transaction.Id},{

transaction.At},{transaction.Type},{transaction.Amount},{

transaction.SourceAccountId},{sourceAccount?.Name},{

transaction.TargetAccountId},{targetAccount?.Name}");

}

}

This loop queries transactions, and for each transaction issues two more small queries to get the source and target accounts. The results are written to a report.

If we assume a query latency of just 1 millisecond and run such code for 100K transactions, the account lookups alone (up to two per transaction, so up to 200K round trips) easily bring us to 200+ seconds of execution.

Reality turns out to be much worse. The program spends most of its lifetime waiting for database results, and an iteration doesn't start until all the work of the previous iterations is complete.

We can do better, even without rewriting all the code!

Let's articulate our goals:

- To make the code fast.

- To keep the code recognizable.

The idea is to form two processing pipelines:

- (a) one that processes data in parallel, out of order;

- (b) another that processes data serially, in the original order.

Each pipeline may post sub-tasks to the other, so (a) runs its tasks in parallel and unordered, while (b) runs its tasks as if everything were running serially.

So, the parallel plan looks like this:

- Queue a parallel sub-task (a) for each transaction.

- A parallel sub-task in (a) reads the source and target accounts, and queues a serial sub-task (b), passing it the transaction and accounts.

- A serial sub-task (b) increments the index and writes a report record.

- Wait for all tasks to complete.
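The plan above can be sketched in a few lines of Java. This is only a minimal illustration of the two-pipeline idea, not the wrapper used in the project: the class name `OrderedParallel` and its `forEach` method are hypothetical, and for simplicity it queues all parallel sub-tasks up front instead of bounding the queue as a Dataflow block would.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical illustration of the plan; not the Parallel class from the project. */
final class OrderedParallel {
    /**
     * Runs {@code fetch} for every item on a thread pool (pipeline a, unordered),
     * then passes the results to {@code consume} on the calling thread,
     * one by one, in the original order of {@code items} (pipeline b).
     */
    static <T, R> void forEach(
            List<T> items,
            int parallelism,
            Function<T, R> fetch,
            Consumer<R> consume) {
        ExecutorService pool = Executors.newFixedThreadPool(parallelism);
        try {
            // Queue a parallel sub-task for each item; they complete in any order.
            List<CompletableFuture<R>> pending = new ArrayList<>();
            for (T item : items) {
                pending.add(CompletableFuture.supplyAsync(() -> fetch.apply(item), pool));
            }
            // Serial part: wait for results in submission order, so counters
            // and output records behave exactly as in the serial loop.
            for (CompletableFuture<R> future : pending) {
                consume.accept(future.join());
            }
        } finally {
            pool.shutdown();
        }
    }
}
```

Here `fetch` plays the role of the account lookups and `consume` the role of the index increment and report line; the waiting times of the lookups overlap, while the serial part never sees results out of order.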

To reduce the burden of managing task pipelines we use Dataflow (Task Parallel Library), and encapsulate everything in a small wrapper.

Consider the refactored code:

public void CreateReport(StringWriter writer)

{

using var parallel = new Parallel(options.Value.Parallelism);

var index = 0;

parallel.ForEachAsync(

dataService.

GetTransactions().

OrderBy(item => (item.At, item.SourceAccountId)),

transaction =>

{

var sourceAccount = dataService.GetAccount(transaction.SourceAccountId);

var targetAccount = transaction.TargetAccountId != null ?

dataService.GetAccount(transaction.TargetAccountId) : null;

parallel.PostSync(

(transaction, sourceAccount, targetAccount),

data =>

{

var (transaction, sourceAccount, targetAccount) = data;

++index;

if (index % 100 == 0)

{

Console.WriteLine(index);

}

writer.WriteLine($"{index},{transaction.Id},{

transaction.At},{transaction.Type},{transaction.Amount},{

transaction.SourceAccountId},{sourceAccount?.Name},{

transaction.TargetAccountId},{targetAccount?.Name}");

});

});

}

Consider the key points:

- We create a Parallel utility class, passing it the requested degree of parallelism.

- We iterate transactions using parallel.ForEachAsync(), which queues a parallel sub-task for each transaction and then waits until all tasks are complete.

- Each parallel sub-task receives a transaction. It may be called from a different thread.

- Having received the required accounts, we queue a sub-task for synchronous execution using parallel.PostSync(), and

- pass it the data collected in the parallel sub-task: the transaction and accounts.

- We deconstruct the passed data into variables, and then proceed with the serial logic.

What we achieve with this refactoring:

- The top-level query that brings transactions is executed and iterated serially.

- But each iteration body runs in parallel. By default we allow up to 100 parallel executions. The parallel sub-tasks do not wait on each other, so their waiting times do not add up.

- Sync sub-tasks are queued and executed in the order of their serial appearance, so the increments and report records are subject neither to race conditions nor to reordering of output records.

We think that such refactored code is still recognizable.

As for performance, this is what the log shows:

Serial test

100

...

Execution time: 00:01:33.8152540

Parallel test

100

...

Execution time: 00:00:05.8705468

Reference

Please take a look at the project to understand the implementation details, and in particular the Parallel class, which implements the API to post parallel and serial tasks, run loops, and more.

Please continue reading on GitHub.

As we wrote some time ago, we started to use AI and ML APIs in our applications, but this time we've tried to use Bing chat as a tool.

The initial task was to take a sequence of integers and return a list of integer pairs, where the first number is a range's start and the second is the count of values in the range. For example, for the sequence 1, 2, 3, 5, 8, 9 we'd like to get the following results:

1, 3

5, 1

8, 2

We both started to solve this issue. My brother started to play with SSMS using his deep knowledge of SQL and analytic functions, whereas I started to play with Bing chat. Looking ahead, I can say that we both solved the issue using almost the same SQL statement in almost the same time.

Here are my prompts and Bing's answers:

- Prompt:

Act as database analyst and software engineer. Given the table (T) with a numeric field (N), which values are orderd ranges of integers. In a range each next value is greater than previous one on 1. When value differs from the previous one more than on 1 then in belongs to another range. Generate select statement that returns rows with two fields. The first field (R) is a number from which a range starts and the second field (C) is count elements of that range:

- Answer:

Sure, I can help with that. Here's a SQL query that should do the trick. This query uses a self-join to

identify the start of each range, and then groups by the start of the range to count the number of elements.

```sql

SELECT

start_of_range AS R,

COUNT(*) AS C

FROM (

SELECT

N,

N - ROW_NUMBER() OVER (ORDER BY N) AS start_of_range

FROM T

) subquery

GROUP BY start_of_range

ORDER BY start_of_range;

```

In this query, `start_of_range` is calculated as the difference between the current value of `N` and its row

number (when ordered by `N`). This difference will be the same for all `N` in the same range. Then we group

by `start_of_range` to get the count of elements in each range. The outer query selects the start of each

range (`R`) and the count of elements in that range (`C`).

Me, scratching my head, hmmm...

- Question:

This is a bit incorrect SQL select. For example for values 1, 2, 3, 5, 8, 9 I'd like to get the following results:

1, 3

5, 1

8, 2

What a SQL select shall I use to get such results?

- Answer:

I apologize for the confusion earlier. You're right, the previous query doesn't handle the scenario you've described correctly.

Here's a corrected SQL query that should give you the desired output. This query uses a window function to compare each row with the previous one and identify the start of a new range. Then it groups by the start of the range to count the elements.

```sql

WITH ranges AS (

SELECT

N,

CASE

WHEN N - LAG(N, 1, N) OVER (ORDER BY N) > 1 THEN 1

ELSE 0

END AS is_start_of_range

FROM T

),

range_groups AS (

SELECT

N,

SUM(is_start_of_range) OVER (ORDER BY N) AS range_group

FROM ranges

)

SELECT

MIN(N) AS R,

COUNT(*) AS C

FROM range_groups

GROUP BY range_group

ORDER BY R;

```

In this query, `is_start_of_range` is a flag that indicates the start of a new range (when the difference between the current and previous `N` is more than 1). `range_group` is a running total of `is_start_of_range` that serves as a unique identifier for each range. The outer query then groups by `range_group` to get the start (`R`) and count (`C`) of each range. I hope this helps! Let me know if you have any other questions.

That answer satisfied me more, and I checked it with the following example:

with T as

(

select 10 N

union all

select 11 N

union all

select 30 N

union all

select 31 N

union all

select 32 N

union all

select 50 N

union all

select 80 N

union all

select 81 N

union all

select 82 N

union all

select 83 N

union all

select 90 N

),

ranges AS (

SELECT

N,

CASE

WHEN N - LAG(N, 1, N) OVER (ORDER BY N) > 1 THEN 1

ELSE 0

END AS is_start_of_range

FROM T

),

range_groups AS (

SELECT

N,

SUM(is_start_of_range) OVER (ORDER BY N) AS range_group

FROM ranges

)

SELECT

MIN(N) AS R,

COUNT(*) AS C

FROM range_groups

GROUP BY range_group

ORDER BY R;

The result was:

R C

------------

10 2

30 3

50 1

80 4

90 1
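For comparison, the same flag-on-gap logic can be sketched procedurally in Java. This is only an illustration under the assumption that the input is already sorted; the class name `Ranges` and method `group` are made up for this sketch and are not part of the solution above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical procedural counterpart of the SQL query above. */
final class Ranges {
    /**
     * Returns {start, count} pairs for runs of consecutive integers.
     * The input is assumed to be sorted in ascending order.
     */
    static List<long[]> group(long[] sorted) {
        List<long[]> result = new ArrayList<>();
        long[] current = null;
        for (int i = 0; i < sorted.length; i++) {
            // Same test as the is_start_of_range flag: the first value,
            // or a gap of more than 1 from the previous value, opens a new range.
            boolean startsRange = i == 0 || sorted[i] - sorted[i - 1] > 1;
            if (startsRange) {
                current = new long[] { sorted[i], 0 };
                result.add(current);
            }
            // Every value is counted in the range that is currently open.
            current[1]++;
        }
        return result;
    }
}
```

Calling `Ranges.group(new long[] {10, 11, 30, 31, 32, 50, 80, 81, 82, 83, 90})` yields the same five pairs as the result table: (10, 2), (30, 3), (50, 1), (80, 4), (90, 1).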

Conclusion:

Nowadays, using AI as a tool, you may improve your performance to the expert level and, maybe, learn something new...

P.S. Don't fool yourself that AI can replace a developer (see the first answer of the chat), but together they can be much stronger than separately.

P.P.S. Our colleague gave us another interesting solution. She used an additional field to avoid using analytic functions, and she reached the same result:

with T as

(

select 10 ID, 1 N

union all

select 11 ID, 1 N

union all

select 30 ID, 1 N

union all

select 31 ID, 1 N

union all

select 32 ID, 1 N

union all

select 50 ID, 1 N

union all

select 80 ID, 1 N

union all

select 81 ID, 1 N

union all

select 82 ID, 1 N

union all

select 83 ID, 1 N

union all

select 90 ID, 1 N

),

Groups AS (

SELECT

ID,

N,

ROW_NUMBER() OVER (ORDER BY ID) - ID AS GroupNumber

FROM

T

)

SELECT

MIN(ID) AS R,

SUM(N) AS C

FROM

Groups

GROUP BY

GroupNumber

ORDER BY

R;

Earlier we wrote that we've recently gotten a few tasks related to machine learning.

The prerequisite for such a task is to collect and prepare the input data.

Usually the required data is scattered across public sites; some of it is in plain text format (or close to it), but some is accessible only as the output of public applications. To obtain the required data from such sites you have to navigate through pages, which often requires keeping state between navigations.

To implement this task you need some kind of website crawler/scraper.

Fortunately, there are a lot of frameworks, libraries and tools in C# (and in other languages too) that allow you to do this (visit this or this site to see the most popular of them), for example:

- ScrapySharp

- ABot

- HtmlAgilityPack

- DotnetSpider

There are pros and cons to using these libraries. The most crucial con is the lack of support for rich UIs based on heavy client-side scripts and client-side state, since not all such libraries fully implement browser emulation, and some of them do not even support JavaScript execution.

So they are suitable for gathering information from simple web pages, but no library makes it easy to navigate to a given page of a web application that keeps rich client-side state. Even the best of them, like ScrapySharp, require heavy programming to achieve the result.

Then we suddenly recalled that for several years we have been using Selenium and web drivers to automate web tests for AngularJS/Angular projects.

After a short discussion we came to the conclusion that there is no big difference between testing a web application and collecting data, since one of the testing stages is collecting the actual results (data) from the tested page, and our tests usually consist of chains of actions performed on consecutively visited pages.

This way we came to the idea of using the WebDriver API implemented by the Selenium project.

There are implementations of this API in different languages, C# included.

Using WebDriver we can easily implement cumbersome navigation of a complex web application and collect the required data. Moreover, WebDriver can run in headless (screenless) mode.

Some of its features allow you to take snapshots of the virtual screen and to store the HTML source that results from JavaScript execution. These features are very useful for run-time troubleshooting. To script navigation of a complex web application we need only a bit more knowledge than an ordinary user of the application: we need to identify page elements somehow, for example by CSS selectors or by the id of HTML elements (as we do for tests). All the rest, like cookies, view state (if any), values of hidden fields, and some JavaScript events, is handled transparently.

Although one may say that the Selenium approach is rather heavy, it ought to be mentioned that it is rather scalable. You may either run several threads with a separate WebDriver instance in each thread, or run several processes simultaneously.

However, besides the pros there are cons to the Selenium solution. They appear when you decide to deploy it, e.g. to an Azure environment.

Note that the Selenium approach requires a browser on the server. There is also a problem with Azure itself, as it's Microsoft's platform and Selenium is a product of their main competitor Google... So, some issues aren't technical. The only possible solution is to use the PaaS approach instead of SaaS, but in this case you have to support everything by yourself...

The other problem is that if your application crawls rather aggressively, either the servers where you gather data or your own host might ban it.

So, be gentle, play nice, and implement delays between requests.

Also, take into account that legal problems may arise when you implement any crawler, since not all web sites allow you to pull anything you want. Many sites have terms & conditions that define rules for the site's users (which your crawler should follow too); otherwise legal action may be taken against them (or against their owners, in the case of a crawler). There is a very interesting article that describes many pitfalls of implementing your own crawler.

To summarize everything said above, the Selenium project can be used in many scenarios, and one of them is creating a powerful crawler.

Eventually we started to deal with tasks that required machine learning. Thus, a good tutorial for ML.NET was required, and we found this one, which comes with good, simple code samples. Thanks to Jeff Prosise. Hope it will be helpful to you too.

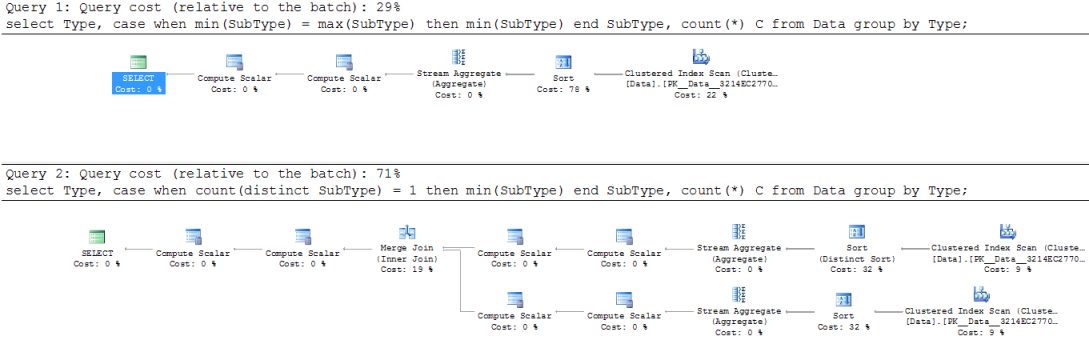

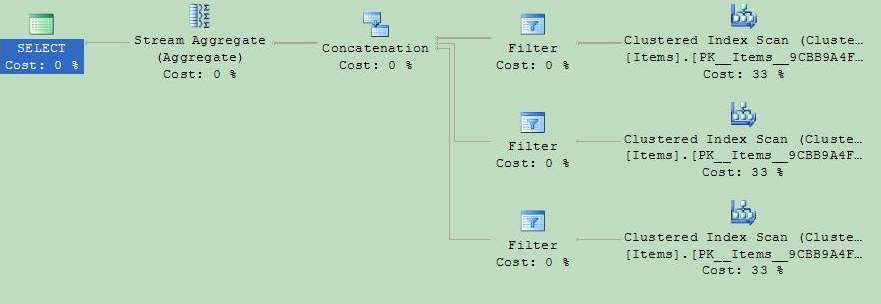

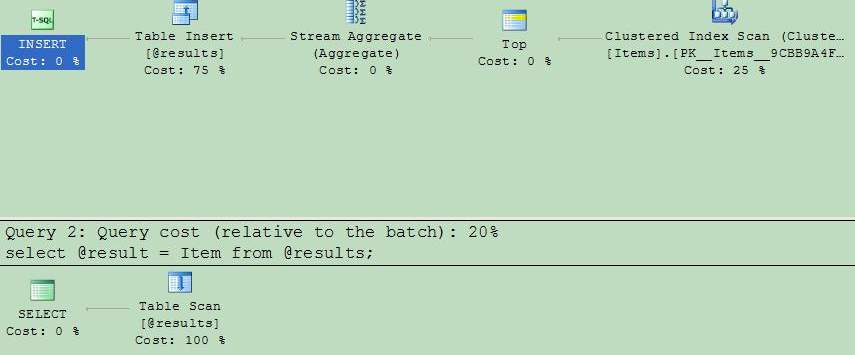

Recently a colleague turned to us and asked for help with a complex query.

It turned out that the complex part was to understand what he wanted to achieve.

After listening to him we formulated the task in our own words and confirmed that this was what he wanted.

So, here is the task in our formulation:

- Assume you have events.

- Each event acts upon one or more accounts.

- Find all events that act on the same set of accounts.

- Note that we deal with multiple millions of events and accounts.

Data is defined like this:

create table dbo.Event

(

EventID bigint not null,

AccountID varchar(18) not null,

primary key(EventID, AccountID)

);

The requested query turned out to be very simple, yet not as simple as one would think, given the big amount of data:

with D as

(

select * from dbo.Event

),

S as

(

select

EventID,

count(*) Items,

checksum_agg(checksum(AccountID)) Hash

from

D

group by

EventID

)

select

S1.EventID, S2.EventID

from

S S1

inner join

S S2

on

S1.EventID < S2.EventID and

S1.Items = S2.Items and

S1.Hash = S2.Hash and

not exists

(

select AccountID from D where EventID = S1.EventID

except

select AccountID from D where EventID = S2.EventID

);

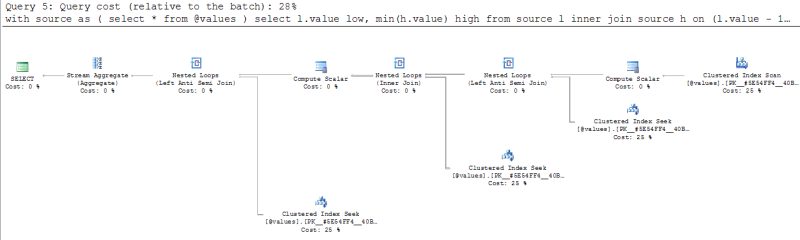

The idea is to:

- calculate a hash derived from list of accounts for each group;

- join groups with the same hash;

- verify that matched groups fit perfectly.
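The same hash-then-verify shape can be sketched in memory in Java. This is only an illustration, not a replacement for the query: the class name `SameAccountSets` is hypothetical, the data is assumed to fit in memory, and the result is returned as groups of event IDs rather than as pairs.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Hypothetical in-memory counterpart of the query above. */
final class SameAccountSets {
    /**
     * Takes a map of event ID to the set of account IDs the event acts upon
     * and returns groups of event IDs that share exactly the same account set.
     */
    static List<List<Long>> find(Map<Long, Set<String>> accountsByEvent) {
        // HashMap narrows candidates by Set.hashCode() (cheap, order-independent)
        // and confirms a match with Set.equals() (exact comparison):
        // the same "join on hash, then verify" idea as in the SQL.
        Map<Set<String>, List<Long>> groups = new HashMap<>();
        // TreeMap makes the event IDs inside each group come out in ascending order.
        for (Map.Entry<Long, Set<String>> e : new TreeMap<>(accountsByEvent).entrySet()) {
            groups.computeIfAbsent(e.getValue(), k -> new ArrayList<>()).add(e.getKey());
        }
        List<List<Long>> result = new ArrayList<>();
        for (List<Long> ids : groups.values()) {
            if (ids.size() > 1) {
                result.add(ids);
            }
        }
        return result;
    }
}
```

For events 1 and 2 acting on accounts {A, B}, event 3 on {A} and event 4 on {A, B, C}, the sketch returns a single group containing events 1 and 2.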

An even simpler solution that does not use hashes does not scale, as its running time grows faster than O(N^2), where N is the number of events. Its run time is unacceptable already at N ~1e4, to say nothing of N ~1e7.

At this point our colleague was already satisfied, as he got the result in a couple of minutes for a task that he could not even formalize as SQL.

But we felt it could be even better.

We looked at statistics:

with D as

(

select * from dbo.Event

),

S as

(

select

EventID,

count(*) Items

from

D

group by

EventID

)

select

Items, count(*) EventCount

from

S

group by

Items

order by

EventCount desc;

and saw that most of the events, about 90%, deal with a single account, and all the others with two or more (some of them act upon a big number of accounts).

The nature of the dataset gave us a hint for a more verbose but faster query:

with D as

(

select * from dbo.Event

),

S as

(

select

EventID,

min(AccountID) AccountID,

count(*) Items,

checksum_agg(checksum(AccountID)) Hash

from

D

group by

EventID

)

select

S1.EventID, S2.EventID

from

S S1

inner join

S S2

on

S1.EventID < S2.EventID and

S1.Items = 1 and

S2.Items = 1 and

S1.AccountID = S2.AccountID

union all

select

S1.EventID, S2.EventID

from

S S1

inner join

S S2

on

S1.EventID < S2.EventID and

S1.Items > 1 and

S2.Items > 1 and

S1.Items = S2.Items and

S1.Hash = S2.Hash and

not exists

(

select AccountID from D where EventID = S1.EventID

except

select AccountID from D where EventID = S2.EventID

);

This query produced results in twenty seconds instead of a couple of minutes for a dataset with ~1e7 rows.

We're not sure what use our XSLT Graph exercises have, but what we are sure of is that they stress different parts of the Saxon XSLT engine and help to find and resolve different bugs.

While implementing a biconnected components algorithm we accidentally ran into an internal error in Saxon 10.1 with a rather simple XSLT:

<?xml version="1.0" encoding="utf-8"?>

<xsl:stylesheet version="3.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform"

xmlns:xs="http://www.w3.org/2001/XMLSchema"

xmlns:array="http://www.w3.org/2005/xpath-functions/array"

exclude-result-prefixes="xs array">

<xsl:template match="/">

<xsl:sequence select="

array:fold-left

(

[8, 9],

(),

function($first as item(), $second as item())

{

min(($first, $second))

}

)"/>

</xsl:template>

</xsl:stylesheet>

More details can be found in Saxon's issue tracker: Bug #4578: NullPointerException when array:fold-left|right $zero argument is an empty sequence.

The bug was promptly resolved.

People compare Angular and AngularJS, and it seems an established fact that Angular is the evolutionarily more advanced framework. We're not going to contradict this; on the contrary, we agree with it. But it's better for an opinion to be grounded in facts that one can evaluate and verify.

Fortunately we got a chance to make such a comparison.

We support conversions of Cool:GEN (a legacy CASE tool with roots in the 1980s) to Java or C#. In its time Cool:GEN made it possible to greatly automate enterprise development, using mainframes as the server side and mainframe terminals or Win32 GUIs as clients.

The legacy of this tool is probably hundreds of business and database models, and millions of programs generated in COBOL on mainframes and in C or Java on Windows and Linux. All of this still runs today in many economic sectors.

Usually the client is an enterprise that has invested a lot in the design, development and support of its business model using Cool:GEN; most such clients are now trying not to lose this legacy but to convert it into something that keeps pace with today's technologies.

As the original technology is sound, it is possible to map it to today's Java or C# on the server, REST or SOAP as a transport, and Angular, AngularJS or something else on the client. Such automatic conversion is the essence of our conversion efforts.

To understand the scope, consider a typical enterprise client that has 2-3 thousand windows backed by 20-30 thousand programs.

Now, consider that the conversion is done. As output, among other things, we produce a clean Java or C# web application with REST and SOAP interfaces, and an Angular or AngularJS web client that encapsulates those 2-3 thousand windows.

Each window definition is rather small, 5-10 KB in HTML form, but the whole mass of windows takes 10-30 MB, which is not small any more.

For AngularJS we generate just those HTML templates, but for Angular we need to generate a separate component for each window, which includes a TypeScript class, a template and styles.

While the amount of generated resources for AngularJS stays within those 10-30 MB, the generated Angular takes at least 5-10 MB more.

The next step is build.

AngularJS builds a distribution that includes all used libraries and a set of templates, and it takes something like a minute of CPU time. The produced output is about 300 KB of minified script and those 10-30 MB of templates (multiple files of 5-10 KB each).

Angular (here we talk about version 9) builds a distribution that includes all used libraries and a set of compiled components that are to be loaded lazily on demand. Out of the box, the Angular builder, which performs tree shaking, takes days to build. With tree shaking off it takes 40 minutes. This is the first notable difference. The produced output for ES2015 (latest JavaScript) is about 1 MB, plus 15-100 KB per compiled component. This is the second notable difference, which already impacts the end user rather than the developer.

The third difference is in the end user experience. Though we have built equivalent Angular and AngularJS frontends, we observe that the load time of Angular is higher. This cannot be ascribed only to bigger file sizes. It seems internal initialization also takes more time for Angular.

So, our experience in this particular test shows that Angular has more room for improvement, in particular in compile time, bundle size, runtime speed and simplicity of dynamic loading (we have strong cases where template compilation is not the best approach).

We were asked to help with a search service at one enterprise. We were told that their SharePoint portal did not serve their needs. The main complaints were about the quality of search results.

They had decided to implement an external index of SharePoint content, using Elastic, and to expose a custom search API within the enterprise.

We questioned their conclusions: we asked why they thought Elastic would give much better results, and whether they had tried to figure out why SharePoint did not give the desired results.

The answers did not convince us, though we joined the project.

What do you think?

Elastic did not help at all, though they hoped very much that its query language would help to rank results in such a way that matching documents would be found.

After all, they thought it was a problem of ranking of results.

Here we started our analysis. We took a specific document that must be found but is never returned from search.

It turned out to be a well-known problem; at least we had dealt with a closely related one in the past. There are two ingredients here:

- documents that have a low chance of being found are PDFs;

- we live in Israel, so most texts are in Hebrew, which means words are written from right to left, while some other texts run from left to right. See Bi-directional text.

Traditionally PDF documents are produced in a way that only distantly resembles the logical structure of the original content. E.g., paragraphs of text are often represented as unrelated runs of text lines, or as sets of text runs representing single words or independent characters. Needless to say, an additional complication is that Hebrew text is often stored in visual order (from left to right, as if "hello" were stored as "olleh" and then just printed from right to left). Another common feature of PDFs is custom fonts with non-canonical mappings, or images with glyphs of letters.

You can implement these tricks in other document formats, but for some reason PDF is the only format we have seen that regularly and intensively uses these techniques.

At this point we realized that it's not the search engine's fault that it cannot find the document; it's a feature of PDF to expose its text to a crawler in a form that cannot be used for search.

In fact, even a PDF viewer cannot search within such documents: when you try to find some text that you clearly see in the document opened in a PDF viewer, you often find nothing.

A question: what should you do when no PDF text extractor can give you the correct text, but the text is there when you look at the document in a PDF viewer?

We decided it's time to go in the direction of image recognition. Thankfully, nowadays it's a matter of available processing resources.

Our goals were:

- Have images of each PDF page. This task is immediately solved with Apache PDFBox (A Java PDF Library); it's time to say that this is a Java project.

- Run Optical Character Recognition (OCR) over the images and get the extracted texts. This is perfectly done by tesseract-ocr/tesseract, and thanks to its Java wrapper bytedeco/javacpp-presets we can call this C++ API directly from Java.

The only small nuisance of tesseract is that it does not expose table recognition info, but we can easily overcome this (we solved this task in the past), as along with each text run tesseract exposes its position.

What are the results of running such a program?

- Full success! It works with high recognition quality. Indeed, there is no physical noise to impact the quality.

- Slow speed: up to several seconds of recognition per page.

- Scalable solution. Slow speed can be compensated by almost unlimited theoretical scalability.

So, what is the lesson we have taken from this experience?

Well, you should question yourself, and test and verify ideas on the ground before building any theories that will lead you in a completely wrong direction. After all, people started to realize there was no need to blame SharePoint, to throw it away, and to spend a great deal of time and money just to prove that the problem lay elsewhere.

Sample source code can be found in App.java.

In some code we needed to perform a circular shift of a part of an array, like in the following picture:

It's clear what to do, especially in the case of a one-element shift, but think about an "optimal" algorithm that performs the minimal number of data movements.

Here is what we came up with in C#: an algorithm doing a single pass over the data.

/// <summary>

/// <para>

/// Moves content of list within half-open range <code>[start, end)</code>.

/// <code>to</code> should belong to that range.

/// </para>

/// <para>

/// <code>list[start + (to - start + i) mod (end - start)] =

/// list[start + i]</code>,

/// where i is in range <code>[0, end - start)</code>.

/// </para>

/// </summary>

/// <typeparam name="T">An element type.</typeparam>

/// <param name="list">A list to move data within.</param>

/// <param name="start">Start position, inclusive.</param>

/// <param name="end">End position, exclusive.</param>

/// <param name="to">Target position.</param>

public static void CircularMove<T>(IList<T> list, int start, int end, int to)

{

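var size = end - start;

// Nothing to move in an empty range; this also avoids reading list[start] out of bounds.

if (size <= 0)

{

return;

}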

var step = to - start;

var anchor = start;

var pos = start;

var item = list[pos];

for(int i = 0; i < size; ++i)

{

pos += step;

if (pos >= end)

{

pos -= size;

}

var next = list[pos];

list[pos] = item;

item = next;

if (pos == anchor)

{

pos = ++anchor;

if (pos >= end)

{

break;

}

item = list[pos];

}

}

}
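To see the effect on concrete data, here is a Java port of the method, cross-checked against the standard `Collections.rotate`. This is only a sketch for illustration: the class name `CircularMoveDemo` and the `expected` helper are made up here, and the port adds an early return for an empty range.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical Java port of the C# method above, for experimenting. */
final class CircularMoveDemo {
    /** Moves list[start + i] to list[start + (to - start + i) mod (end - start)]. */
    static <T> void circularMove(List<T> list, int start, int end, int to) {
        int size = end - start;
        if (size <= 0) {
            return; // empty range: nothing to move
        }
        int step = to - start;
        int anchor = start; // first position of the cycle being processed
        int pos = start;
        T item = list.get(pos);
        for (int i = 0; i < size; ++i) {
            // Jump to the target position, wrapping around inside the range.
            pos += step;
            if (pos >= end) {
                pos -= size;
            }
            // Put the carried item in place and pick up the displaced one.
            T next = list.get(pos);
            list.set(pos, item);
            item = next;
            // The cycle is closed: continue with the next, not yet visited one.
            if (pos == anchor) {
                pos = ++anchor;
                if (pos >= end) {
                    break;
                }
                item = list.get(pos);
            }
        }
    }

    /** Reference result computed with the standard library. */
    static <T> List<T> expected(List<T> list, int start, int end, int to) {
        List<T> copy = new ArrayList<>(list);
        Collections.rotate(copy.subList(start, end), to - start);
        return copy;
    }
}
```

For the list 0, 1, 2, 3, 4, 5 with start = 1, end = 5 and to = 2, the range 1, 2, 3, 4 is shifted by one position, giving 0, 4, 1, 2, 3, 5. Each element of the range is written exactly once.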

J2SE has become so large that its different parts don't play well together.

That is a pity, but there's nothing to be done. Oracle probably lacks the resources to fill the gaps.

So, to the point.

There is a relatively new API to work with time, defined in the package java.time. There is an older API, JAXB, to serialize and deserialize beans to and from XML (and often to JSON). For JAXB to be viable, it should be able to deal with basic primitive types. The problem is that JAXB does not handle LocalDate, LocalTime, LocalDateTime, and ZonedDateTime out of the box.

We do understand that:

- JAXB is the older and java.time the newer API; and that

- JAXB has no built-in plugin to handle the new types.

But this does not help, and we have to define/redefine serialization adapters using some drop-in code or third-party libraries. Here are these convenience adapters:

LocalDateAdapter.java

import java.time.LocalDate;

import java.time.LocalDateTime;

import java.time.ZonedDateTime;

import javax.xml.bind.annotation.adapters.XmlAdapter;

/**

* An adapter for the bean properties of {@link LocalDate} type.

*/

public class LocalDateAdapter extends XmlAdapter<String, LocalDate>

{

/**

* Converts {@link LocalDate} into a string value.

* @param value a value to convert. Can be null.

* @return a string value.

*/

@Override

public String marshal(LocalDate value)

throws Exception

{

return value == null ? null : value.toString();

}

/**

* Converts a string value into a {@link LocalDate}

* instance.

* @param value a value to convert. Can be null.

* @return a {@link LocalDate} instance.

*/

@Override

public LocalDate unmarshal(String value)

throws Exception

{

if (value == null)

{

return null;

}

int p = value.indexOf('T');

if (p < 0)

{

return LocalDate.parse(value);

}

while(++p < value.length())

{

switch(value.charAt(p))

{

case '+':

case '-':

case 'Z':

{

return ZonedDateTime.parse(value).toLocalDate();

}

}

}

return LocalDateTime.parse(value).toLocalDate();

}

}

LocalDateTimeAdapter.java

import java.time.LocalDate;

import java.time.LocalDateTime;

import java.time.LocalTime;

import java.time.ZonedDateTime;

import javax.xml.bind.annotation.adapters.XmlAdapter;

/**

* An adapter for the bean properties of {@link LocalDateTime} type.

*/

public class LocalDateTimeAdapter extends XmlAdapter<String, LocalDateTime>

{

/**

* Converts {@link LocalDateTime} into a string value.

* @param value a value to convert. Can be null.

* @return a string value.

*/

@Override

public String marshal(LocalDateTime value)

throws Exception

{

return value == null ? null : value.toString();

}

/**

* Converts a string value into a {@link LocalDateTime} instance.

* @param value a value to convert. Can be null.

* @return a {@link LocalDateTime} instance.

*/

@Override

public LocalDateTime unmarshal(String value)

throws Exception

{

if (value == null)

{

return null;

}

int p = value.indexOf('T');

if (p < 0)

{

return LocalDateTime.of(LocalDate.parse(value), LocalTime.MIN);

}

while(++p < value.length())

{

switch(value.charAt(p))

{

case '+':

case '-':

case 'Z':

{

return ZonedDateTime.parse(value).toLocalDateTime();

}

}

}

return LocalDateTime.parse(value);

}

}

LocalTimeAdapter.java

import java.time.LocalTime;

import javax.xml.bind.annotation.adapters.XmlAdapter;

/**

* An adapter for the bean properties of {@link LocalTime} type.

*/

public class LocalTimeAdapter extends XmlAdapter<String, LocalTime>

{

/**

* Converts {@link LocalTime} into string value.

* @param value a value to convert. Can be null.

* @return a string value

*/

@Override

public String marshal(LocalTime value)

throws Exception

{

return value == null ? null : value.toString();

}

/**

* Converts a string value into a {@link LocalTime} instance.

* @param value a value to convert. Can be null.

* @return a {@link LocalTime} instance.

*/

@Override

public LocalTime unmarshal(String value)

throws Exception

{

return value == null ? null : LocalTime.parse(value);

}

}

For the adapters to work, either the fields/properties or the whole package should be annotated with JAXB XML adapters.

The simplest way is to annotate at the package level like this:

package-info.java

@XmlJavaTypeAdapters(

{

@XmlJavaTypeAdapter(value = LocalDateAdapter.class, type = LocalDate.class),

@XmlJavaTypeAdapter(value = LocalTimeAdapter.class, type = LocalTime.class),

@XmlJavaTypeAdapter(value = LocalDateTimeAdapter.class, type = LocalDateTime.class)

})

package com.nesterovskyBros.demo.entities;

import java.time.LocalDate;

import java.time.LocalDateTime;

import java.time.LocalTime;

import javax.xml.bind.annotation.adapters.XmlJavaTypeAdapter;

import javax.xml.bind.annotation.adapters.XmlJavaTypeAdapters;
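To see which input shapes the unmarshal methods above accept, here is a minimal sketch that repeats the branching of LocalDateAdapter.unmarshal() using java.time only, so it runs without JAXB on the classpath. The class name DateParsingSketch, the method name toLocalDate and the sample values are ours and serve only as an illustration.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZonedDateTime;

// Hypothetical stand-alone sketch: mirrors LocalDateAdapter.unmarshal()
// without depending on JAXB.
class DateParsingSketch
{
  static LocalDate toLocalDate(String value)
  {
    if (value == null)
    {
      return null;
    }

    int p = value.indexOf('T');

    // No time part: a plain ISO date.
    if (p < 0)
    {
      return LocalDate.parse(value);
    }

    // Look for an offset sign or 'Z' after the 'T' separator.
    while(++p < value.length())
    {
      char c = value.charAt(p);

      if (c == '+' || c == '-' || c == 'Z')
      {
        return ZonedDateTime.parse(value).toLocalDate();
      }
    }

    // Date and time without an offset.
    return LocalDateTime.parse(value).toLocalDate();
  }

  public static void main(String[] args)
  {
    // Each of the four sample values yields the date 2015-03-01.
    System.out.println(toLocalDate("2015-03-01"));
    System.out.println(toLocalDate("2015-03-01T10:15:30"));
    System.out.println(toLocalDate("2015-03-01T10:15:30+02:00"));
    System.out.println(toLocalDate("2015-03-01T23:30:00Z"));
  }
}
```

Note that the date is taken as written in the value: an offset is accepted but no time zone conversion is applied.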

We've run into the following Java function that models a variation of NULL arithmetic:

public static Long add(Long value1, Long value2)

{

return value1 == null ? value2 : value2 == null ? value1 : value1 + value2;

}

When this function runs, the outcome is different from what we expected.

Here is a quiz:

What is the outcome of add(1, 2):

- 3;

- null;

- other.

What is the outcome of add(3, null):

- 3;

- null;

- other.

What is the outcome of add(null, 4):

- null;

- 4;

- other.

What is the outcome of add(null, null):

- null;

- 0;

- other.

Our assumptions were:

- add(1, 2) == 3;

- add(3, null) == 3;

- add(null, 4) == 4;

- add(null, null) == null.

Java works differently:

- add(1, 2) == 3;

- add(3, null) == 3;

- add(null, 4) == 4;

- add(null, null) throws NullPointerException.

The problem is with the compile-time type of the ternary ?: operator. The compiler decides it's long, while we intuitively expected Long. Java unboxes the selected operand to long (which results in an NPE when that operand is null), and then boxes the result back to Long.

Correct code would be:

public static Long add(Long value1, Long value2)

{

if (value1 == null)

{

return value2;

}

else if (value2 == null)

{

return value1;

}

else

{

return value1 + value2;

}

}

This version does not cast anything to long, and works as we originally expected.

Honestly, we're a bit anxious about this subtle difference between if-then-else and the ?: operator.
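The difference is easy to check with a small program. The sketch below puts the two versions side by side; the class name TernaryDemo and the method names addTernary and addIfElse are ours. Since a conditional expression that mixes Long and long operands has type long, only the case where the selected operand is null ends in a NullPointerException.

```java
// Hypothetical demo class; the names addTernary and addIfElse are ours.
class TernaryDemo
{
  // Original version: mixing Long and long operands makes the whole
  // conditional expression a long, so the selected Long operand is unboxed.
  static Long addTernary(Long value1, Long value2)
  {
    return value1 == null ? value2 : value2 == null ? value1 : value1 + value2;
  }

  // if-then-else version: each return passes its Long operand through as is.
  static Long addIfElse(Long value1, Long value2)
  {
    if (value1 == null)
    {
      return value2;
    }
    else if (value2 == null)
    {
      return value1;
    }
    else
    {
      return value1 + value2;
    }
  }

  public static void main(String[] args)
  {
    System.out.println(addTernary(1L, 2L));    // prints 3
    System.out.println(addIfElse(null, null)); // prints null

    try
    {
      addTernary(null, null);
    }
    catch(NullPointerException e)
    {
      // Unboxing of the null value2 fails here.
      System.out.println("NullPointerException");
    }
  }
}
```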

Our recent task required us to find all sets of non-intersecting rectangles for a given list of rectangles.

At first glance it did not look like a trivial task. Just consider that for a list of N rectangles you can form 2^N different subsets. So even the result list can, theoretically, be enormous.

Fortunately, we knew that our result would be manageable in size. Nevertheless, suppose you have a list of a couple of hundred rectangles: how would you enumerate all the different sets of rectangles?

By the way, this task sounds like one of Google's interview questions. So you may try to solve it yourself before checking our solution.

We didn't even dare to think of a brute-force solution: enumerating all sets and then checking whether each one fits our needs.

Instead we used induction:

- Suppose S(N) is a solution of our task for N rectangles R(1), ..., R(N), where S(N) is a set of sets of rectangles;

- Then the solution S(N+1) contains the whole S(N), the set consisting of the single rectangle R(N+1), and those sets of rectangles from S(N) combined with R(N+1) that satisfy the condition;

- S(0) is an empty set.

The algorithm was implemented in Java, and at first it used Streaming and recursion.

Then we figured out that we could use Stream.reduce or Stream.collect to implement the same algorithm. That second implementation was a little bit longer but probably faster, and besides it used standard idioms.

But then, at the last step, we reformulated the algorithm in terms of Collections.

Though the final implementation is the least similar to the original induction algorithm, it's straightforward and definitely the fastest among all implementations we tried.

So, here is the code:

/**

* For a sequence of items builds a list of matching groups.

* @param identity an identity instance used for the group.

* @param items original sequence of items.

* @param matcher a group matcher of item against a group.

* @param combiner creates a new group from a group (optional) and an item.

* @return a list of matching groups.

*/

public static <T, G> List<G> matchingGroups(

G identity,

Iterable<T> items,

BiPredicate<G, T> matcher,

BiFunction<G, T, G> combiner)

{

ArrayList<G> result = new ArrayList<>();

for(T item: items)

{

int size = result.size();

result.add(combiner.apply(identity, item));

for(int i = 0; i < size; ++i)

{

G group = result.get(i);

if (matcher.test(group, item))

{

result.add(combiner.apply(group, item));

}

}

}

return result;

}

The sample project on GitHub contains the implementation and tests of this algorithm.
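To show how matchingGroups() may be applied to the rectangle task, here is a minimal usage sketch. The Rect class, its intersects() test, the nonIntersectingSets() helper and the sample data are our assumptions made for illustration; matchingGroups() itself is copied from the listing above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.BiFunction;
import java.util.function.BiPredicate;

// Hypothetical usage sketch; Rect and the sample data are ours.
class RectangleGroups
{
  static final class Rect
  {
    final int left, top, right, bottom;

    Rect(int left, int top, int right, int bottom)
    {
      this.left = left;
      this.top = top;
      this.right = right;
      this.bottom = bottom;
    }

    // Two rectangles intersect when they overlap on both axes.
    boolean intersects(Rect that)
    {
      return (left < that.right) && (that.left < right) &&
        (top < that.bottom) && (that.top < bottom);
    }
  }

  // A copy of matchingGroups() from the post.
  static <T, G> List<G> matchingGroups(
    G identity,
    Iterable<T> items,
    BiPredicate<G, T> matcher,
    BiFunction<G, T, G> combiner)
  {
    ArrayList<G> result = new ArrayList<>();

    for(T item: items)
    {
      int size = result.size();

      result.add(combiner.apply(identity, item));

      for(int i = 0; i < size; ++i)
      {
        G group = result.get(i);

        if (matcher.test(group, item))
        {
          result.add(combiner.apply(group, item));
        }
      }
    }

    return result;
  }

  static List<List<Rect>> nonIntersectingSets(List<Rect> rectangles)
  {
    List<Rect> empty = new ArrayList<>();

    return matchingGroups(
      empty,
      rectangles,
      // An item matches a group if it intersects none of its members.
      (group, item) -> group.stream().noneMatch(item::intersects),
      // A new group is a copy of the old one plus the item.
      (group, item) ->
      {
        List<Rect> next = new ArrayList<>(group);

        next.add(item);

        return next;
      });
  }

  public static void main(String[] args)
  {
    List<Rect> rectangles = Arrays.asList(
      new Rect(0, 0, 2, 2),  // A
      new Rect(3, 3, 5, 5),  // B, apart from A
      new Rect(1, 1, 4, 4)); // C, overlaps both A and B

    // Groups are {A}, {B}, {A, B}, {C}.
    System.out.println(nonIntersectingSets(rectangles).size()); // prints 4
  }
}
```

Groups are kept as immutable-by-convention lists: the combiner always copies, so groups already placed in the result are never modified while the list grows.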

It's a very old theme...

Many years ago we defined a .NET wrapper around the Windows Uniscribe API.

The Uniscribe API is used to render bidirectional languages like Hebrew, so it's important mainly here in Israel.

Once in a while we get requests from people to share that API, so we have published it on GitHub at https://github.com/nesterovsky-bros/BidiVisualConverter.

You're welcome to use it!

We solved this problem years ago, but have run into it once again.

So, we shall log the solution here.

The problem: to minify the payload of JAXB-serialized beans.

Java beans have many properties, and most of them contain default values: zero ints, empty strings, and so on.

JAXB never tries to omit default values from the marshalled XML; the only thing it can remove from the output is null values. So our approach is to define an XML adapter that maps default values to nulls.

Here we refer to the StackOverflow question "Prevent writing default attribute values JAXB", and to our answer.

Though it's not as terse as one would wish, one can create XmlAdapters to avoid marshalling the default values.

The use case is like this:

@XmlRootElement(name = "FIELD")

public class TestLayoutNode

{

@XmlAttribute(name = "num")

@XmlJavaTypeAdapter(value = IntegerZero.class, type = int.class)

public int number;

@XmlAttribute(name = "str")

@XmlJavaTypeAdapter(StringDefault.class)

public String str = "default";

}

And here are the adapters.

IntegerZero:

public class IntegerZero extends DefaultValue<Integer>

{

public Integer defaultValue() { return 0; }

}

StringDefault:

public class StringDefault extends DefaultValue<String>

{

public String defaultValue() { return "default"; }

}

DefaultValue:

public class DefaultValue<T> extends XmlAdapter<T, T>

{

public T defaultValue() { return null; }

public T marshal(T value) throws Exception

{

return (value == null) || value.equals(defaultValue()) ? null : value;

}

public T unmarshal(T value) throws Exception

{

return value;

}

}

With a small number of different default values this approach works well.
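The effect of the adapters can be checked without a JAXB runtime. In the sketch below SimpleAdapter is our stand-in for JAXB's XmlAdapter (an assumption made only so that the example compiles on its own), and DefaultValueSketch is a hypothetical wrapper class; DefaultValue and IntegerZero repeat the logic of the classes above.

```java
// Hypothetical stand-alone sketch: SimpleAdapter is a stand-in for JAXB's
// XmlAdapter so that the example compiles without JAXB on the classpath.
class DefaultValueSketch
{
  static abstract class SimpleAdapter<V, B>
  {
    abstract V marshal(B value) throws Exception;

    abstract B unmarshal(V value) throws Exception;
  }

  static class DefaultValue<T> extends SimpleAdapter<T, T>
  {
    T defaultValue() { return null; }

    @Override
    T marshal(T value) throws Exception
    {
      // A null result tells the marshaller to omit the attribute.
      return (value == null) || value.equals(defaultValue()) ? null : value;
    }

    @Override
    T unmarshal(T value) throws Exception
    {
      return value;
    }
  }

  static class IntegerZero extends DefaultValue<Integer>
  {
    @Override
    Integer defaultValue() { return 0; }
  }

  public static void main(String[] args) throws Exception
  {
    IntegerZero adapter = new IntegerZero();

    System.out.println(adapter.marshal(0));  // prints null
    System.out.println(adapter.marshal(42)); // prints 42
  }
}
```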

Though ADO.NET and ORM frameworks like Entity Framework and Dapper support the async pattern, you should remember that database drivers (at least all we know about) do not support concurrent db commands running against a single connection.

To see what we mean, consider a bug we have recently identified. Consider this code:

await Task.WhenAll(

newImages.

Select(

async image =>

{

// Load data from url.

image.Content = await HttpUtils.ReadData(image.Url);

// Insert image into the database.

image.ImageID = await context.InsertImage(image);

}));

The code runs multiple tasks to read images and to write them into a database.

All these tasks are started together and run concurrently. HttpUtils.ReadData() has no problem with parallel execution, while context.InsertImage() does not run well in parallel and is subject to race conditions.

To work around the problem we had to use an async variant of a critical section. So the fixed code looks like this:

using(var semaphore = new SemaphoreSlim(1))

{

await Task.WhenAll(

newImages.

Select(

async image =>

{

// Load data from url.

image.Content = await HttpUtils.ReadData(image.Url);

await semaphore.WaitAsync();

try

{

// Insert image into the database.

image.ImageID = await context.InsertImage(image);

}

finally

{

semaphore.Release();

}

}));

}

So, in the async world we still should care about race conditions.

In our AngularJS projects we often deal with existing models that do not always fit AngularJS expectations.

Here is an example.

There is a model consisting of two arrays: one for data, and one for associated data. How do we create an ng-repeat that displays data from both sources?

Consider a test controller (see the GitHub sources, and a rawgit working sample):

module.controller(

"Test",

function()

{

this.records =

[

{ name: "record 1", state: "Draft" },

{ name: "record 2", state: "Public" },

{ name: "record 3", state: "Disabled" },

{ name: "record 4", state: "Public" },

{ name: "record 5", state: "Public" }

];

this.more =

[

{ value: 1, selected: true, visible: true },

{ value: 2, selected: false, visible: true },

{ value: 3, selected: true, visible: true },

{ value: 4, selected: false, visible: false },

{ value: 5, selected: false, visible: true }

];

this.delete = function(index)

{

this.records.splice(index, 1);

this.more.splice(index, 1);

};

});

Basically there are three approaches here:

- Change the model.

- Adapt the model to a single collection.

- ng-repeat over the first array and access the second array using the $index scope variable.

We argued like this:

- It is often not an option to change a model, as it's how business data are described.

- A model adaptation, where we build a single collection from the original two collections and synchronize it back (if required), may unnecessarily complicate things.

- Thus let's get the associated items by the $index variable.

This is an example of ng-repeat use:

<table border="1">

<tr>

<th>[x]</th>

<th>Name</th>

<th>Value</th>

<th>State</th>

<th>Actions</th>

</tr>

<tr ng-repeat="item in test.records track by $index"

ng-if="test.more[$index].visible">

<td>

<input type="checkbox" ng-model="test.more[$index].selected"/>

</td>

<td>{{item.name}}</td>

<td>{{test.more[$index].value}}</td>

<td>{{item.state}}</td>

<td>

<a href="#" ng-click="test.delete($index)">Delete</a>

</td>

</tr>

</table>

Look at how the associated data is accessed: test.more[$index]... Our goal was to get rid of those repeating parts, so we looked at the ng-init directive.

Though the docs warn about its use: "the only appropriate use of ngInit is for aliasing special properties of ngRepeat", we thought that our use of ng-init was rather close to what the docs state, so we tried the following:

...

<tr ng-repeat="item in test.records track by $index"

ng-init="more = test.more[$index]"

ng-if="more.visible">

<td>

<input type="checkbox" ng-model="more.selected"/>

</td>

<td>{{item.name}}</td>

<td>{{more.value}}</td>

<td>{{item.state}}</td>

<td>

<a href="#" ng-click="test.delete($index)">Delete</a>

</td>

</tr>

...

This code just does not work: it shows an empty table, as if ng-if always evaluated to false. From the docs we found the reason:

- the priority of the ng-if directive is higher than the priority of ng-init, and besides ng-if is a terminal directive;

- as a result, the ng-if directive is bound, and ng-init is not;

- when ng-if is evaluated, no $scope.more is defined, so more.visible evaluates to false.

To work around the ng-init/ng-if problem we refactored ng-if as ng-if-start/ng-if-end:

...

<tr ng-repeat="item in test.records track by $index"

ng-init="more = test.more[$index]">

<td ng-if-start="more.visible">

<input type="checkbox" ng-model="more.selected"/>

</td>

<td>{{item.name}}</td>

<td>{{more.value}}</td>

<td>{{item.state}}</td>

<td ng-if-end>

<a href="#" ng-click="test.delete($index)">Delete</a>

</td>

</tr>

...

This code works much better and shows the correct content. But then click "Delete" for the row with Name "record 2" and you will find that the updated table is out of sync for all data that comes from the test.more array.

So, why does the data go out of sync? The reason is in the way ng-init is implemented: its expression is evaluated just once, at the directive's pre-link phase. So the value of $scope.more persists for the whole of ng-init's life cycle, and it does not matter that test.more[$index] may have changed at some point.

At this point we decided to introduce a small directive named ui-eval that acts in a way similar to ng-init but that:

- runs before ng-if;

- is re-evaluated when its value changes.

This is it:

module.directive(

"uiEval",

function()

{

var directive =

{

restrict: 'A',

priority: 700,

link:

{

pre: function(scope, element, attr)

{

scope.$watch(attr["uiEval"]);

}

}

};

return directive;

});

The ui-eval version of the markup is:

...

<tr ng-repeat="item in test.records track by $index"

ui-eval="more = test.more[$index]"

ng-if="more.visible">

<td>

<input type="checkbox" ng-model="more.selected"/>

</td>

<td>{{item.name}}</td>

<td>{{more.value}}</td>

<td>{{item.state}}</td>

<td>

<a href="#" ng-click="test.delete($index)">Delete</a>

</td>

</tr>

...

It works as expected both during initial rendering and when the model is updated.

We consider ui-eval a "better" ng-init, as it overcomes ng-init's silent limitations. On the other hand, it should not be used to evaluate any complex logic, as it may be re-evaluated often, so its use case is to alias a sub-expression. It can be used in any context and is not limited to items of ng-repeat.

Source code can be found at GitHub, and a working sample at rawgit.

Stack Overflow shows that people are searching for "How to intercept $resource requests".

Recently we have written about a way to cancel AngularJS $resource requests (see Cancel angularjs resource request).

Here we apply the same technique to intercept a resource request.

Consider a sample (nesterovsky-bros/angularjs-api/master/angularjs/transform-request.html):

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<title>Intercept resource request</title>

<style type="text/css">.ng-cloak { display: none; }</style>

<script src="angular.js"></script>

<script src="angular-resource.js"></script>

<script>

angular.module("app", ["ngResource"]).

factory(

"services",

["$resource", function ($resource)

{

return $resource(

"http://md5.jsontest.com/",

{},

{

MD5:

{

method: "GET",

params: { text: null },

then: function (resolve)

{

this.params.text = "***" + this.params.text + "***";

this.then = null;

resolve(this);

}

},

});

}]).

controller(

"Test",

["services", function (services)

{

this.value = "Sample text";

this.call = function()

{

this.result = services.MD5({ text: this.value });

}

}]);

</script>

</head>

<body ng-app="app" ng-controller="Test as test">

<label>Text: <input type="text" ng-model="test.value" /></label>

<input type="button" value="call" ng-click="test.call()"/>

<div ng-bind="test.result.md5"></div>

</body>

</html>

How it works:

- $resource merges the action definition, request params and data to build a config parameter for an $http request;

- the config parameter passed into an $http request is treated as a promise-like object, so it may contain a then function to initialize the config;

- the action's then function may transform the request as it wishes.

The demo can be found at transform-request.html.

Often we need to keep a client session state in our AngularJS application.

This state should survive page refresh and navigation within the application.

Earlier we used the ngStorage module, but lately we have changed our opinion, as we think it's over-engineered and too heavy at runtime.

We have replaced it with a simple service that synchronizes sessionStorage once during initialization, and once before page unload.

Look at an example (session.html):

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<title>Session</title>

<style type="text/css">

.ng-cloak { display: none; }

</style>

<script src="angular.js"></script>

<script>

angular.module("app", []).

factory(

"session",

["$window", function($window)

{

var session =

angular.fromJson($window.sessionStorage.getItem("app")) || {};

$window.addEventListener(

"beforeunload",

function()

{

$window.sessionStorage.setItem("app", angular.toJson(session));

});

return session;

}]).

controller(

"Test",

["session",

function(session)

{

this.state = session;

}]);

</script>

</head>

<body ng-app="app" ng-controller="Test as test">

<input type="text" ng-model="test.state.value"/>

<a href="session.html?p=1">Page 1</a>

<a href="session.html?p=2">Page 2</a>

</body>

</html>

Source can be found at nesterovsky-bros/angularjs-api/services/session.html.

Earlier this year Mike Wasson published a post, "Dependency Injection in ASP.NET Web API 2", that describes Web API's approach to the Dependency Injection design pattern.

In short it goes like this:

- Web API provides a primary integration point through the HttpConfiguration.DependencyResolver property, and tries to obtain many services through this resolver;

- Web API suggests using your favorite Dependency Injection library through the integration point. The author lists the following libraries: Unity (by Microsoft), Castle Windsor, Spring.Net, Autofac, Ninject, and StructureMap.

The Unity Container (Unity) is a lightweight, extensible dependency injection container. There are Nugets both for Unity library and for Web API integration.

Now to the point of this post.

Unity defines a hierarchy of injection scopes. In Web API they are usually mapped to application and request scopes. This way a developer can inject application singletons, create request level, or transient objects.

Everything looks reasonable. The only problem we have found is that there is no way to inject Web API objects like HttpConfiguration, HttpControllerContext or the request's CancellationToken, as they are never registered for injection.

To work around this we have created a small class called UnityControllerActivator that performs the required registration:

using System;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

using System.Web.Http.Controllers;

using System.Web.Http.Dispatcher;

using Microsoft.Practices.Unity;

/// <summary>

/// Unity controller activator.

/// </summary>

public class UnityControllerActivator: IHttpControllerActivator

{

/// <summary>

/// Creates an UnityControllerActivator instance.

/// </summary>

/// <param name="activator">Base activator.</param>

public UnityControllerActivator(IHttpControllerActivator activator)

{

if (activator == null)

{

throw new ArgumentNullException("activator");

}

this.activator = activator;

}

/// <summary>

/// Creates a controller wrapper.

/// </summary>

/// <param name="request">A http request.</param>

/// <param name="controllerDescriptor">Controller descriptor.</param>

/// <param name="controllerType">Controller type.</param>

/// <returns>A controller wrapper.</returns>

public IHttpController Create(

HttpRequestMessage request,

HttpControllerDescriptor controllerDescriptor,

Type controllerType)

{

return new Controller

{

activator = activator,

controllerType = controllerType

};

}

/// <summary>

/// Base controller activator.

/// </summary>

private readonly IHttpControllerActivator activator;

/// <summary>

/// A controller wrapper.

/// </summary>

private class Controller: IHttpController, IDisposable

{

/// <summary>

/// Base controller activator.

/// </summary>

public IHttpControllerActivator activator;

/// <summary>

/// Controller type.

/// </summary>

public Type controllerType;

/// <summary>

/// A controller instance.

/// </summary>

public IHttpController controller;

/// <summary>

/// Disposes controller.

/// </summary>

public void Dispose()

{

var disposable = controller as IDisposable;

if (disposable != null)

{

disposable.Dispose();

}

}

/// <summary>

/// Executes an action.

/// </summary>

/// <param name="controllerContext">Controller context.</param>

/// <param name="cancellationToken">Cancellation token.</param>

/// <returns>Response message.</returns>

public Task<HttpResponseMessage> ExecuteAsync(

HttpControllerContext controllerContext,

CancellationToken cancellationToken)

{

if (controller == null)

{

var request = controllerContext.Request;

var container = request.GetDependencyScope().

GetService(typeof(IUnityContainer)) as IUnityContainer;

if (container != null)

{

container.RegisterInstance<HttpControllerContext>(controllerContext);

container.RegisterInstance<HttpRequestMessage>(request);

container.RegisterInstance<CancellationToken>(cancellationToken);

}

controller = activator.Create(

request,

controllerContext.ControllerDescriptor,

controllerType);

}

controllerContext.Controller = controller;

return controller.ExecuteAsync(controllerContext, cancellationToken);

}

}

}

A note on how it works:

- IHttpControllerActivator is a controller factory, which Web API uses to create new controller instances using IHttpControllerActivator.Create(). Later the controller's IHttpController.ExecuteAsync() is called to run the logic.

- UnityControllerActivator replaces the original controller activator with a wrapper that delays creation (injection) of the real controller until request objects are registered in the scope.

To register this class one needs to update the code in UnityWebApiActivator.cs (a file added by the Unity.AspNet.WebApi NuGet package):

public static class UnityWebApiActivator

{

/// <summary>Integrates Unity when the application starts.</summary>

public static void Start()

{

var config = GlobalConfiguration.Configuration;

var container = UnityConfig.GetConfiguredContainer();

container.RegisterInstance<HttpConfiguration>(config);

container.RegisterInstance<IHttpControllerActivator>(

new UnityControllerActivator(config.Services.GetHttpControllerActivator()));

config.DependencyResolver = new UnityHierarchicalDependencyResolver(container);

}

...

}

With this addition we have simplified the tedious problem of passing a CancellationToken all around the code, as the controller (and other classes) just declare a property to inject:

public class MyController: ApiController

{

[Dependency]

public CancellationToken CancellationToken { get; set; }

[Dependency]

public IModelContext Model { get; set; }

public async Task<IEnumerable<Products>> GetProducts(...)

{

...

}

public async Task<IEnumerable<Customer>> GetCustomer(...)

{

...

}

...

}

...

public class ModelContext: IModelContext

{

[Dependency]

public CancellationToken CancellationToken { get; set; }

...

}

And finally, to unit test controllers with Dependency Injection you can use code like this:

using System.Threading;

using System.Threading.Tasks;

using System.Web.Http;

using System.Web.Http.Controllers;

using System.Web.Http.Dependencies;

using System.Net.Http;

using Microsoft.Practices.Unity;

using Microsoft.Practices.Unity.WebApi;

using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]

public class MyControllerTest

{

[ClassInitialize]

public static void Initialize(TestContext context)

{

config = new HttpConfiguration();

Register(config);

}

[ClassCleanup]

public static void Cleanup()

{

config.Dispose();

}

[TestMethod]

public async Task GetProducts()

{

var controller = CreateController<MyController>();

//...

}

public static T CreateController<T>(HttpRequestMessage request = null)

where T: ApiController

{

if (request == null)

{

request = new HttpRequestMessage();

}

request.SetConfiguration(config);

var controllerContext = new HttpControllerContext()

{

Configuration = config,

Request = request

};

var scope = request.GetDependencyScope();

var container = scope.GetService(typeof(IUnityContainer))

as IUnityContainer;

if (container != null)

{

container.RegisterInstance<HttpControllerContext>(controllerContext);

container.RegisterInstance<HttpRequestMessage>(request);

container.RegisterInstance<CancellationToken>(CancellationToken.None);

}

T controller = scope.GetService(typeof(T)) as T;

controller.Configuration = config;

controller.Request = request;

controller.ControllerContext = controllerContext;

return controller;

}

public static void Register(HttpConfiguration config)

{

config.DependencyResolver = CreateDependencyResolver(config);

}

public static IDependencyResolver CreateDependencyResolver(HttpConfiguration config)

{

var container = new UnityContainer();

container.RegisterInstance<HttpConfiguration>(config);

// TODO: configure Unity container.

return new UnityHierarchicalDependencyResolver(container);

}

public static HttpConfiguration config;

}

P.S. To those who think Dependency Injection is a universal tool, please read the article: Dependency Injection is Evil.

Earlier this year Mike Wasson has published a post: "Dependency Injection in ASP.NET Web API 2" that describes Web API's approach to the Dependency Injection design pattern.

In short it goes like this:

- Web API provides a primary integration point through

HttpConfiguration.DependencyResolver property, and tries to obtain many services through this resolver;

- Web API suggests to use your favorite Dependecy Injection library through the integration point. Author lists following libraries: Unity (by Microsoft), Castle Windsor, Spring.Net, Autofac, Ninject, and StructureMap.

The Unity Container (Unity) is a lightweight, extensible dependency injection container. There are Nugets both for Unity library and for Web API integration.

Now to the point of this post.

Unity defines a hierarchy of injection scopes. In Web API they are usually mapped to application and request scopes. This way a developer can inject application singletons, create request level, or transient objects.

Everything looks reasonable. The only problem we have found is that there is no way you to inject Web API objects like HttpConfiguration, HttpControllerContext or request's CancellationToken, as they are never registered for injection.

To workaround this we have created a small class called UnityControllerActivator that perfroms required registration:

using System;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

using System.Web.Http.Controllers;

using System.Web.Http.Dispatcher;

using Microsoft.Practices.Unity;

/// <summary>

/// Unity controller activator.

/// </summary>

public class UnityControllerActivator: IHttpControllerActivator

{

/// <summary>

/// Creates an UnityControllerActivator instance.

/// </summary>

/// <param name="activator">Base activator.</param>

public UnityControllerActivator(IHttpControllerActivator activator)

{

if (activator == null)

{

throw new ArgumentException("activator");

}

this.activator = activator;

}

/// <summary>

/// Creates a controller wrapper.

/// </summary>

/// <param name="request">A http request.</param>

/// <param name="controllerDescriptor">Controller descriptor.</param>

/// <param name="controllerType">Controller type.</param>

/// <returns>A controller wrapper.</returns>

public IHttpController Create(

HttpRequestMessage request,

HttpControllerDescriptor controllerDescriptor,

Type controllerType)

{

return new Controller

{

activator = activator,

controllerType = controllerType

};

}

/// <summary>

/// Base controller activator.

/// </summary>

private readonly IHttpControllerActivator activator;

/// <summary>

/// A controller wrapper.

/// </summary>

private class Controller: IHttpController, IDisposable

{

/// <summary>

/// Base controller activator.

/// </summary>

public IHttpControllerActivator activator;

/// <summary>

/// Controller type.

/// </summary>

public Type controllerType;

/// <summary>

/// A controller instance.

/// </summary>

public IHttpController controller;

/// <summary>

/// Disposes controller.

/// </summary>

public void Dispose()

{

var disposable = controller as IDisposable;

if (disposable != null)

{

disposable.Dispose();

}

}

/// <summary>

/// Executes an action.

/// </summary>

/// <param name="controllerContext">Controller context.</param>

/// <param name="cancellationToken">Cancellation token.</param>

/// <returns>Response message.</returns>

public Task<HttpResponseMessage> ExecuteAsync(

HttpControllerContext controllerContext,

CancellationToken cancellationToken)

{

if (controller == null)

{

var request = controllerContext.Request;

var container = request.GetDependencyScope().

GetServices(typeof(IUnityContainer)) as IUnityContainer;

if (container != null)

{

container.RegisterInstance<HttpControllerContext>(controllerContext);

container.RegisterInstance<HttpRequestMessage>(request);

container.RegisterInstance<CancellationToken>(cancellationToken);

}

controller = activator.Create(

request,

controllerContext.ControllerDescriptor,

controllerType);

}

controllerContext.Controller = controller;

return controller.ExecuteAsync(controllerContext, cancellationToken);

}

}

}

Note on how it works.

IHttpControllerActivator is a controller factory, which Web API uses to create new controller instances using IHttpControllerActivator.Create(). Later controller's IHttpController.ExecuteAsync() is called to run the logic.-

UnityControllerActivator replaces original controller activator with a wrapper that delays creation (injection) of real controller untill request objects are registered in the scope

To register this class one need to update code in the UnityWebApiActivator.cs (file added with nuget Unity.AspNet.WebApi)

public static class UnityWebApiActivator

{

/// <summary>Integrates Unity when the application starts.<summary>

public static void Start()

{

var config = GlobalConfiguration.Configuration;

var container = UnityConfig.GetConfiguredContainer();

container.RegisterInstance<HttpConfiguration>(config);

container.RegisterInstance<IHttpControllerActivator>(

new UnityControllerActivator(config.Services.GetHttpControllerActivator()));

config.DependencyResolver = UnityHierarchicalDependencyResolver(container);

}

...

}

With this addition we have simplified the boring problem with passing of CancellationToken all around the code, as controller (and other classes) just declared a property to inject:

public class MyController: ApiController

{

[Dependency]

public CancellationToken CancellationToken { get; set; }

[Dependency]

public IModelContext Model { get; set; }

public async Task<IEnumerable<Products>> GetProducts(...)

{

...

}

public async Task<IEnumerable<Customer>> GetCustomer(...)

{

...

}

...

}

...

public class ModelContext: IModelContext

{

[Dependency]

public CancellationToken CancellationToken { get; set; }

...

}

And finally to perform unit tests for controllers with Depenency Injection you can use a code like this:

using System.Threading;

using System.Threading.Tasks;

using System.Web.Http;

using System.Web.Http.Controllers;

using System.Web.Http.Dependencies;

using System.Net.Http;

using Microsoft.Practices.Unity;

using Microsoft.Practices.Unity.WebApi;

using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]

public class MyControllerTest

{

[ClassInitialize]

public static void Initialize(TestContext context)

{

config = new HttpConfiguration();

Register(config);

}

[ClassCleanup]

public static void Cleanup()

{

config.Dispose();

}

[TestMethod]

public async Task GetProducts()

{

var controller = CreateController<MyController>();

//...

}

public static T CreateController<T>(HttpRequestMessage request = null)

where T: ApiController

{

if (request == null)

{

request = new HttpRequestMessage();

}

request.SetConfiguration(config);

var controllerContext = new HttpControllerContext()

{

Configuration = config,

Request = request

};

var scope = request.GetDependencyScope();

var container = scope.GetService(typeof(IUnityContainer))

as IUnityContainer;

if (container != null)

{

container.RegisterInstance<HttpControllerContext>(controllerContext);

container.RegisterInstance<HttpRequestMessage>(request);

container.RegisterInstance<CancellationToken>(CancellationToken.None);

}

T controller = scope.GetService(typeof(T)) as T;

controller.Configuration = config;

controller.Request = request;

controller.ControllerContext = controllerContext;

return controller;

}

public static void Register(HttpConfiguration config)

{

config.DependencyResolver = CreateDependencyResolver(config);

}

public static IDependencyResolver CreateDependencyResolver(HttpConfiguration config)

{

var container = new UnityContainer();

container.RegisterInstance<HttpConfiguration>(config);

// TODO: configure Unity container.

return new UnityHierarchicalDependencyResolver(container);

}

public static HttpConfiguration config;

}

P.S. To those who think Dependency Injection is a universal tool, please read the article: Dependency Injection is Evil.

Looking at Guava Cache we think its API is more convenient than .NET's Cache API.

Just consider:

- .NET has getters and setters of objects by string keys. You have to provide a caching policy with each setter.

- Guava cache operates on a typed storage of Key to Value. It takes a value factory and a caching policy in advance, at cache construction.

Guava's advantage is based on the idea that a homogeneous storage assumes a uniform way of creating values and a uniform caching policy. Thus a great part of the logic is factored out into cache initialization.
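For contrast, here is a minimal sketch of how the plain .NET MemoryCache is typically used, with a string key, an untyped value and a policy passed at each store. GetCustomerName and LoadCustomerName are hypothetical names, and the 10 minute expiration is an arbitrary assumption made for the example.

```csharp
using System;
using System.Runtime.Caching;

public static class RawMemoryCacheDemo
{
    // Hypothetical loader standing in for a database or service call.
    private static string LoadCustomerName(int id)
    {
        return "Customer #" + id;
    }

    public static string GetCustomerName(int id)
    {
        var cache = MemoryCache.Default;

        // Keys are plain strings, and values come back as object.
        var key = "customer:" + id;
        var name = cache.Get(key) as string;

        if (name == null)
        {
            name = LoadCustomerName(id);

            // The creation logic and the policy are repeated
            // at every call site that stores a value.
            cache.Set(
                key,
                name,
                new CacheItemPolicy
                {
                    AbsoluteExpiration = DateTimeOffset.Now.AddMinutes(10)
                });
        }

        return name;
    }

    public static void Main()
    {
        // The first call creates and stores the value,
        // the second call is served from the cache.
        Console.WriteLine(GetCustomerName(1));
        Console.WriteLine(GetCustomerName(1));
    }
}
```

Every method that caches a value has to repeat the key formatting, the cast, the factory call and the policy, which is exactly the part that a Guava-style cache moves into its construction.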

We decided to create a simple adapter over MemoryCache to achieve the same goal. Here is the result of that experiment:

public class Cache<K, V>

where V: class

{

/// <summary>

/// A cache builder.

/// </summary>

public struct Builder

{

/// <summary>

/// A memory cache. If not specified then MemoryCache.Default is used.

/// </summary>

public MemoryCache MemoryCache;

/// <summary>

/// An expiration value.

/// Alternatively CachePolicyFunc can be used.

/// </summary>

public TimeSpan Expiration;

/// <summary>

/// Indicates whether to use sliding (true), or absolute (false)

/// expiration.

/// Alternatively CachePolicyFunc can be used.

/// </summary>

public bool Sliding;

/// <summary>

/// Optional function to get caching policy.

/// Alternatively the Expiration and Sliding fields can be used.

/// </summary>

public Func<V, CacheItemPolicy> CachePolicyFunc;

/// <summary>

/// Optional value validator.

/// </summary>

public Func<V, bool> Validator;

/// <summary>

/// A value factory.

/// Alternatively FactoryAsync can be used.

/// </summary>

public Func<K, V> Factory;

/// <summary>

/// Async value factory.

/// Alternatively Factory can be used.

/// </summary>

public Func<K, Task<V>> FactoryAsync;

/// <summary>

/// A key to string converter.

/// </summary>

public Func<K, string> KeyFunc;

/// <summary>

/// Converts builder to a <see cref="Cache{K, V}"/> instance.

/// </summary>

/// <param name="builder">A builder to convert.</param>

/// <returns>A <see cref="Cache{K, V}"/> instance.</returns>

public static implicit operator Cache<K, V>(Builder builder)

{

return new Cache<K, V>(builder);

}

}

/// <summary>

/// Creates a cache from a cache builder.

/// </summary>

/// <param name="builder">A cache builder instance.</param>

public Cache(Builder builder)

{

if ((builder.Factory == null) && (builder.FactoryAsync == null))

{

throw new ArgumentException("Either Factory or FactoryAsync must be specified.", "builder");

}

if (builder.MemoryCache == null)

{

builder.MemoryCache = MemoryCache.Default;

}

this.builder = builder;

}

/// <summary>

/// Cached value by key.

/// </summary>

/// <param name="key">A key.</param>

/// <returns>A cached value.</returns>

public V this[K key]

{

get { return Get(key); }

set { Set(key, value); }

}

/// <summary>

/// Sets a value for a key.

/// </summary>

/// <param name="key">A key to set.</param>

/// <param name="value">A value to set.</param>

public void Set(K key, V value)

{

SetImpl(GetKey(key), IsValid(value) ? value : null);

}

/// <summary>

/// Gets a value for a key.

/// </summary>

/// <param name="key">A key to get value for.</param>

/// <returns>A value instance.</returns>

public V Get(K key)

{

var keyValue = GetKey(key);

var value = builder.MemoryCache.Get(keyValue) as V;

if (!IsValid(value))

{

value = CreateValue(key);

SetImpl(keyValue, value);

}

return value;

}

/// <summary>

/// Gets a task to return an async value.

/// </summary>

/// <param name="key">A key.</param>

/// <returns>A cached value.</returns>

public async Task<V> GetAsync(K key)

{

var keyValue = GetKey(key);

var value = builder.MemoryCache.Get(keyValue) as V;

if (!IsValid(value))

{

value = await CreateValueAsync(key);

SetImpl(keyValue, value);

}

return value;

}

/// <summary>

/// Gets string key value for a key.

/// </summary>

/// <param name="key">A key.</param>

/// <returns>A string key value.</returns>

protected string GetKey(K key)

{

return builder.KeyFunc != null ? builder.KeyFunc(key) :

key == null ? null : key.ToString();

}

/// <summary>

/// Creates a value for a key.

/// </summary>

/// <param name="key">A key to create value for.</param>

/// <returns>A value instance.</returns>

protected V CreateValue(K key)

{

return builder.Factory != null ? builder.Factory(key) :

builder.FactoryAsync(key).Result;

}

/// <summary>