Although the golden age of IE8 has passed and Microsoft has already ended support for it, this browser still holds about 3% of the worldwide desktop browser market. Small as that share is, many big organisations still use IE8 for enterprise web applications. We can confirm this, since we deal with such organisations around the world. Companies try to get rid of IE8, but this often requires a Windows upgrade and resources to re-test all their web applications. If a company has many web terminals running Windows 7 or even XP, the task becomes rather expensive, so the process advances slowly. Meanwhile, these organisations keep developing new web applications that must work both in modern HTML5 browsers and in old IE8.

A year ago we developed the UIUpload AngularJS directive and service, which simplify file uploading in web applications with an AngularJS client. It works as expected in all HTML5 browsers.

A few days ago, however, we were asked to help with file uploading from an AngularJS web application that must work in IE8. We spent a few hours investigating existing third-party AngularJS directives and components. Here are a few of them:

On IE8, all of these directives degrade to a <form> and an <iframe> and then track the upload progress. None of them lets the user select a file in old browsers. Our aim, by contrast, was to implement an AngularJS directive that both selects a file and uploads it, and that works in IE8 as well as in new browsers.

Since IE8 supports neither FormData nor the File API, the directive must work with DOM elements directly. To open the file selection dialog we hide an <input type="file"/> element and route the client-side click event to it. When a file is selected, it is sent to the server as a multipart/form-data message. The server's response is caught by a hidden <iframe> element and passed to the directive's controller.
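The choice between the two upload paths can be sketched as a small feature test. This is only an illustration: chooseUploadStrategy is a hypothetical name, and the check relies on nothing but the standard FormData, File and FileReader globals.

```javascript
// Decides which upload path a page can use.
// `win` is a window-like object; only standard browser globals are probed.
function chooseUploadStrategy(win) {
  var hasFormData = typeof win.FormData !== "undefined";
  var hasFileApi = typeof win.File !== "undefined" &&
    typeof win.FileReader !== "undefined";

  // Modern browsers: send the file with XMLHttpRequest and FormData.
  // IE8: fall back to a <form> that posts into a hidden <iframe>.
  return hasFormData && hasFileApi ? "xhr" : "iframe";
}

// Stand-in objects, so the sketch runs without a real browser.
var ie8Like = {};
var html5Like = {
  FormData: function () {},
  File: function () {},
  FileReader: function () {}
};

console.log(chooseUploadStrategy(ie8Like));   // iframe
console.log(chooseUploadStrategy(html5Like)); // xhr
```

In a page one would pass the real window object instead of the stand-ins.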

After a few attempts we implemented the desired directive. You may download here a small VS2015 solution that demonstrates this directive and a server-side file handler.

The key feature of this directive is the emulation of the replace and template properties of the directive definition:

var directive =
{
  restrict: "AE",
  scope:
  {
    id: "@",
    serverUrl: "@",
    accept: "@?",
    onSuccess: "&",
    onError: "&?"
  },
  link: function (scope, element, attrs, controller)
  {
    var id = scope.id || ("fileUpload" + scope.$id);

    // The parentheses matter: without them .replace() would be applied
    // to the last string literal only.
    var template = ("<iframe name='%id%$iframe' id='%id%$iframe' style='display: none;'>" +
      "</iframe><form name='%id%$form' enctype='multipart/form-data' " +
      "method='post' action='%action%' target='%id%$iframe'>" +
      "<span style='position:relative;display:inline-block;overflow:hidden;padding:0;'>" +
      "%html%<input type='file' name='%id%$file' id='%id%$file' " +
      "style='position:absolute;height:100%;width:100%;left:-10px;top:-1px;z-index:100;" +
      "font-size:50px;opacity:0;filter:alpha(opacity=0);'/></span></form>").
      replace("%action%", scope.serverUrl).
      replace("%html%", element.html()).
      replace(/%id%/g, id);

    element.replaceWith(template);

    ...
  }
};

We used this emulation because each directive instance (an element) must have a unique name and ID in order to work properly. On the one hand, a template returned by a function must have a single root element when you use replace. On the other hand, IE8 doesn't like such a root element (e.g. we did not succeed in dispatching the click JavaScript event to the <input> element).
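The placeholder substitution itself can be shown in isolation. The sketch below is not the directive's code: fillTemplate is a hypothetical helper, and it uses a function replacer, a swapped-in variation that inserts values literally even when the wrapped markup contains sequences such as "$&".

```javascript
// Hypothetical helper: fills %action%, %html% and %id% in a template string
// in a single pass.
function fillTemplate(template, values) {
  return template.replace(/%(action|html|id)%/g, function (match, name) {
    // A function replacer inserts the value literally, so "$&" or "$1"
    // inside the markup is not treated as a replacement pattern.
    return values[name];
  });
}

var template =
  "<form name='%id%$form' action='%action%' target='%id%$iframe'>" +
  "%html%<input type='file' name='%id%$file'/></form>";

var html = fillTemplate(template, {
  id: "fileUpload7",
  action: "api/upload",
  html: "Price: $& more"
});

console.log(html);
```

Because all placeholders are replaced in one pass, a "%id%" that happens to appear inside the wrapped markup is left alone as well.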

The directive is used much like our previous example (see UIUpload):

<a file-upload=""

class="btn btn-primary"

accept=".*"

server-url="api/upload"

on-success="controller.uploadSucceed(data, fileName)"

on-error="controller.uploadFailed(e)">Click here to upload file</a>

Where:

- accept - a comma-separated list of acceptable file extensions.

- server-url - the server URL to upload the selected file to. If there is no "server-url" attribute, the content of the selected file is passed to the success handler as a data URI.

- on-success - a "success" handler, which is called when the upload has finished successfully.

- on-error - an "error" handler, which is called when the upload has failed.
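To make the meaning of the accept list concrete, here is a minimal sketch of an extension check. isAccepted is a hypothetical helper, not part of the directive, and treating ".*" or an empty list as "accept anything" is our assumption. The code uses ES5 methods (map, filter, trim), so IE8 itself would need polyfills.

```javascript
// Hypothetical helper: checks a file name against an accept list
// such as ".jpg,.png,.gif". ".*" or an empty list means "accept anything"
// (an assumption made for this sketch).
function isAccepted(fileName, accept) {
  var extensions = (accept || "").split(",").
    map(function (item) { return item.trim().toLowerCase(); }).
    filter(function (item) { return item.length > 0; });

  if (extensions.length === 0 || extensions.indexOf(".*") !== -1) {
    return true;
  }

  var dot = fileName.lastIndexOf(".");
  var extension = dot < 0 ? "" : fileName.substring(dot).toLowerCase();

  return extensions.indexOf(extension) !== -1;
}

console.log(isAccepted("photo.JPG", ".jpg,.png")); // true
console.log(isAccepted("notes.txt", ".jpg,.png")); // false
console.log(isAccepted("notes.txt", ".*"));        // true
```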

We hope this simple directive helps those of you who are forced to deal with IE8 and more advanced browsers at the same time to keep calm.

While reading about ASP.NET Core Session and analyzing how it differs from previous versions of ASP.NET, we bumped into a problem...

In Managing Application State they note:

Session is non-locking, so if two requests both attempt to modify the contents of session, the last one will win. Further, Session is implemented as a coherent session, which means that all of the contents are stored together. This means that if two requests are modifying different parts of the session (different keys), they may still impact each other.

This is different from previous versions of ASP.NET where session was blocking, which meant that if you had multiple concurrent requests to the session, then all requests were synchronized. So, you could keep consistent state.

In ASP.NET Core you have no built-in means to keep the session state consistent. Even the assurance that the session is coherent does not help in any way.

Your options are:

- build your own synchronization to deal with this problem (e.g. around the database);

- decree that your application cannot handle concurrent requests to the same session, so client should not attempt it, otherwise behaviour is undefined.
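The first option boils down to serializing work per session key. The sketch below shows the idea in plain JavaScript with promises. It is not ASP.NET code, createKeyedSerializer is a hypothetical name, and it only covers a single process.

```javascript
// Hypothetical helper: runs tasks that share a key strictly one after another.
function createKeyedSerializer() {
  var tails = {}; // key -> promise that settles when the last queued task is done

  return function runExclusive(key, task) {
    var previous = tails[key] || Promise.resolve();
    var current = previous.then(function () { return task(); });
    // The tail never rejects, so a failed task does not block the queue.
    var tail = current.catch(function () {});

    tails[key] = tail;
    tail.then(function () {
      if (tails[key] === tail) {
        delete tails[key]; // nothing else is queued for this key
      }
    });

    return current;
  };
}

var runExclusive = createKeyedSerializer();
var session = { counter: 0 };

// A read-modify-write update with a delay between the read and the write.
function increment() {
  var read = session.counter;
  return new Promise(function (resolve) {
    setTimeout(function () {
      session.counter = read + 1;
      resolve(session.counter);
    }, 10);
  });
}

Promise.all([
  runExclusive("session-1", increment),
  runExclusive("session-1", increment)
]).then(function () {
  console.log(session.counter); // 2; without serialization it would be 1
});
```

With several servers the same idea has to move to shared storage, which is why the list mentions the database.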

Some of our latest projects used a file uploading feature. Whether it is an Excel, an audio or an image file, the uploading mechanism remains the same: in a web application a user selects a file and uploads it to the server. The browser sends this file as a multipart/form-data attachment, which is then handled on the server.

The default HTML way to upload a file to a server is to use an <input type="file"> element in a form. Such an element is rendered differently in different browsers and looks rather ugly. Thus, almost all well-known JavaScript libraries, like jQuery, Kendo UI etc., provide their own implementations of a file upload component. The key word here is "almost", since we didn't find anything like that in AngularJS bootstrap. It is worth saying that we found several third-party implementations of file upload, but they either are rather complex for such a simple task or don't provide a file selection feature. This is why we decided to implement it ourselves.

You may find the sources of our solution, with the upload-link directive and the uiUploader service, here.

Their usage is rather simple.

E.g. for the upload-link directive:

<a upload-link

class="btn btn-primary"

accept=".*"

server-url="api/upload"

on-success="controller.uploadSucceed(data, file)"

on-error="controller.uploadFailed(e)">Click here to upload an image</a>

Where:

- accept - a comma-separated list of acceptable file extensions.

- server-url - the server URL to upload the selected file to. If there is no "server-url" attribute, the content of the selected file is passed to the success handler as a data URI.

- on-success - a "success" handler, which is called when the upload has finished successfully.

- on-error - an "error" handler, which is called when the upload has failed.

Usage of uiUploader service is also easy:

uiUploader.selectAndUploadFile("api/upload", ".jpg,.png,.gif").
  then(
    function(result)
    {
      // TODO: handle the result.
      // result.data - contains the server response
      // result.file - contains uploaded File or Blob instance.
    },
    $log.error);

If the first parameter is null, result.data contains the content of the selected file as a data URI.
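For readers who have not worked with data URIs, here is a minimal sketch of taking one apart. parseDataUri is a hypothetical helper, and Buffer makes the sketch specific to Node.js.

```javascript
// Hypothetical helper: splits a base64 data URI into MIME type and bytes.
function parseDataUri(uri) {
  // data:<mime>[;param=value]*;base64,<payload>
  var match = /^data:([^;,]+)(?:;[^;,]+)*?;base64,([\s\S]*)$/.exec(uri);

  if (!match) {
    throw new Error("Not a base64 data URI.");
  }

  return {
    mimeType: match[1],
    // Buffer is Node.js specific; in a browser atob() plays a similar role.
    bytes: Buffer.from(match[2], "base64")
  };
}

var parsed = parseDataUri("data:text/plain;base64,aGVsbG8=");

console.log(parsed.mimeType, parsed.bytes.toString("utf8")); // text/plain hello
```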

In one of our latest projects we dealt with audio: we captured audio in the browser, stored it on the server, and then returned it on request to be replayed in the browser.

Although audio capturing is by itself a rather interesting and challenging task, it is addressed by HTML5; for an example take a look at this article. Here we share our findings about another problem, namely audio conversion.

You might think that if you have already captured audio in a browser then you will be able to play it back, so no additional audio conversion is required.

In practice we are limited by the browsers' support for various audio formats. Browsers can capture audio in WAV format, but this format is rather heavy for storing and streaming back. Moreover, not all browsers support this format for playback; see Wikipedia for details. Only two audio formats are more or less widely supported by mainstream browsers: MP3 and AAC. So you have to convert WAV either to MP3 or to AAC.

The obvious choice is WAV to MP3 conversion, the benefit being that there are many libraries and APIs for it. But in this case you risk falling into a trap with MP3 licensing, especially if you deal with interactive software products.

Eventually, you will come to the only possible solution (at least at the moment of writing): conversion from WAV to AAC.

The native solution is to use the NAudio library, which behind the scenes uses Media Foundation Transforms. You'll quickly get a working example. Actually, the core of the solution contains only a few lines:

var source = Path.Combine(root, "audio.wav");
var target = Path.Combine(root, "audio.m4a");

using(var reader = new NAudio.Wave.WaveFileReader(source))
{
  MediaFoundationEncoder.EncodeToAac(reader, target);
}

Everything is great. You deploy your code to a server (by the way, the server must run Windows Server 2008 R2 or higher), and at this point you may find that your code fails. The problem is that the Media Foundation API is not preinstalled as part of a Windows Server installation and must be installed separately. If you own the server, everything is all right, but if you use a public web hosting server then you won't be able to install the Media Foundation API, and your application will never work properly. That's what happened to us...

After some research we came to the conclusion that another possible solution is a wrapper around an open source video/audio converter, FFmpeg. There were two issues with this solution:

- how to execute ffmpeg.exe on server asynchronously;

- how to limit maximum parallel requests to conversion service.

Both issues were successfully resolved in our prototype conversion service that you may see here, with the source published on GitHub. The solution is a Web API based REST service with a simple client that uploads audio files to the server using AJAX requests and plays them back. As a bonus, this solution lets us perform not only WAV to AAC conversion, but also conversion from many other formats to AAC without additional effort.

Let's take a close look at the crucial details of this solution. The core is the FFMpegWrapper class, which allows running ffmpeg.exe asynchronously:

/// <summary>

/// A ffmpeg.exe open source utility wrapper.

/// </summary>

public class FFMpegWrapper

{

/// <summary>

/// Creates a wrapper for ffmpeg utility.

/// </summary>

/// <param name="ffmpegexe">a real path to ffmpeg.exe</param>

public FFMpegWrapper(string ffmpegexe)

{

if (!string.IsNullOrEmpty(ffmpegexe) && File.Exists(ffmpegexe))

{

this.ffmpegexe = ffmpegexe;

}

}

/// <summary>

/// Runs ffmpeg asynchronously.

/// </summary>

/// <param name="args">determines command line arguments for ffmpeg.exe</param>

/// <returns>

/// asynchronous result with ProcessResults instance that contains

/// stdout, stderr and process exit code.

/// </returns>

public Task<ProcessResults> Run(string args)

{

if (string.IsNullOrEmpty(ffmpegexe))

{

throw new InvalidOperationException("Cannot find FFMPEG.exe");

}

//create a process info object so we can run our app

var info = new ProcessStartInfo

{

FileName = ffmpegexe,

Arguments = args,

CreateNoWindow = true

};

return ProcessEx.RunAsync(info);

}

private string ffmpegexe;

}

Running a process asynchronously became possible thanks to James Manning and his ProcessEx class.

Another useful part is a semaphore declaration in Global.asax.cs:

public class WebApiApplication : HttpApplication

{

protected void Application_Start()

{

GlobalConfiguration.Configure(WebApiConfig.Register);

}

/// <summary>

/// Gets application level semaphore that controls number of running

/// in parallel FFMPEG utilities.

/// </summary>

public static SemaphoreSlim Semaphore

{

get { return semaphore; }

}

private static SemaphoreSlim semaphore;

static WebApiApplication()

{

var value =

ConfigurationManager.AppSettings["NumberOfConcurentFFMpegProcesses"];

int intValue;

// fall back to the default value when the setting is missing or invalid
if (!int.TryParse(value, out intValue) || (intValue <= 0))
{
  intValue = 10;
}

semaphore = new SemaphoreSlim(intValue, intValue);

}

}
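The role the semaphore plays can be illustrated with a few lines of plain JavaScript. This is a sketch of the idea only, not a port of SemaphoreSlim. The Semaphore type and its members are names made up for this example.

```javascript
// A minimal counting semaphore for async code (a sketch, not a library API).
function Semaphore(max) {
  this.available = max;
  this.waiters = [];
}

// Resolves as soon as a slot is free.
Semaphore.prototype.acquire = function () {
  var self = this;

  if (self.available > 0) {
    self.available--;
    return Promise.resolve();
  }

  return new Promise(function (resolve) { self.waiters.push(resolve); });
};

// Hands the slot to the next waiter, or returns it to the pool.
Semaphore.prototype.release = function () {
  var next = this.waiters.shift();

  if (next) {
    next();
  } else {
    this.available++;
  }
};

// Runs task() under the semaphore and always releases the slot,
// whether the task succeeds or fails.
Semaphore.prototype.run = function (task) {
  var self = this;

  return self.acquire().then(task).then(
    function (value) { self.release(); return value; },
    function (error) { self.release(); throw error; });
};
```

A caller would wrap each conversion as semaphore.run(function () { return convert(file); }), where convert stands for any function returning a promise.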

And the last piece is the entry point, which was implemented as a REST controller:

/// <summary>

/// A controller to convert audio.

/// </summary>

public class AudioConverterController : ApiController

{

/// <summary>

/// Gets ffmpeg utility wrapper.

/// </summary>

public FFMpegWrapper FFMpeg

{

get

{

if (ffmpeg == null)

{

ffmpeg = new FFMpegWrapper(

HttpContext.Current.Server.MapPath("~/lib/ffmpeg.exe"));

}

return ffmpeg;

}

}

/// <summary>

/// Converts an audio in WAV, OGG, MP3 or other formats

/// to AAC format (MP4 audio).

/// </summary>

/// <returns>A data URI as a string.</returns>

[HttpPost]

public async Task<string> ConvertAudio([FromBody]string audio)

{

if (string.IsNullOrEmpty(audio))

{

throw new ArgumentException(

"Invalid audio stream (probably the input audio is too big).");

}

var tmp = Path.GetTempFileName();

var root = tmp + ".dir";

Directory.CreateDirectory(root);

File.Delete(tmp);

try

{

var start = audio.IndexOf(':');

var end = audio.IndexOf(';');

var mimeType = audio.Substring(start + 1, end - start - 1);

var ext = mimeType.Substring(mimeType.IndexOf('/') + 1);

var source = Path.Combine(root, "audio." + ext);

var target = Path.Combine(root, "audio.m4a");

await WriteToFileAsync(audio, source);

switch (ext)

{

case "mpeg":

case "mp3":

case "wav":

case "wma":

case "ogg":

case "3gp":

case "amr":

case "aif":

case "mid":

case "au":

{

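ProcessResults result;

await WebApiApplication.Semaphore.WaitAsync();

try
{
  // quote the paths, as the temporary folder may contain spaces
  result = await FFMpeg.Run(
    string.Format(
      "-i \"{0}\" -c:a libvo_aacenc -b:a 96k \"{1}\"",
      source,
      target));
}
finally
{
  // release the semaphore even when ffmpeg fails to start
  WebApiApplication.Semaphore.Release();
}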

if (result.Process.ExitCode != 0)

{

throw new InvalidDataException(

"Cannot convert this audio file to audio/mp4.");

}

break;

}

default:

{

throw new InvalidDataException(

"Mime type: '" + mimeType + "' is not supported.");

}

}

var buffer = await ReadAllBytes(target);

var response = "data:audio/mp4;base64," + System.Convert.ToBase64String(buffer);

return response;

}

finally

{

Directory.Delete(root, true);

}

}
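One detail of the command line deserves attention: file paths may contain spaces. A way to sidestep quoting altogether is to pass the arguments as a list, as sketched below for Node.js. buildFfmpegArgs is a hypothetical helper. The encoder name and bitrate are taken from the controller above, and whether a given encoder is available depends on the ffmpeg build.

```javascript
// Builds the argument list for converting an audio file to AAC.
// The encoder is a parameter because its availability depends on the ffmpeg build.
function buildFfmpegArgs(source, target, encoder) {
  return ["-i", source, "-c:a", encoder || "libvo_aacenc", "-b:a", "96k", target];
}

var args = buildFfmpegArgs(
  "C:\\Temp\\my audio\\audio.wav",
  "C:\\Temp\\my audio\\audio.m4a");

// With Node.js the list could be passed as is, e.g.
// require("child_process").execFile("ffmpeg.exe", args, callback);
console.log(args.length); // 7
```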

For those who'd like to read more about audio conversion, we suggest reading this article.

Earlier this year Mike Wasson published a post, "Dependency Injection in ASP.NET Web API 2", that describes Web API's approach to the Dependency Injection design pattern.

In short it goes like this:

- Web API provides a primary integration point through the HttpConfiguration.DependencyResolver property, and tries to obtain many services through this resolver;

- Web API suggests using your favorite Dependency Injection library through this integration point. The author lists the following libraries: Unity (by Microsoft), Castle Windsor, Spring.Net, Autofac, Ninject, and StructureMap.

The Unity Container (Unity) is a lightweight, extensible dependency injection container. There are NuGet packages both for the Unity library and for Web API integration.

Now to the point of this post.

Unity defines a hierarchy of injection scopes. In Web API they are usually mapped to application and request scopes. This way a developer can inject application singletons, request-level objects, or transient objects.

Everything looks reasonable. The only problem we have found is that there is no way to inject Web API objects like HttpConfiguration, HttpControllerContext or the request's CancellationToken, as they are never registered for injection.

To work around this we have created a small class called UnityControllerActivator that performs the required registration:

using System;

using System.Net.Http;

using System.Threading;

using System.Threading.Tasks;

using System.Web.Http.Controllers;

using System.Web.Http.Dispatcher;

using Microsoft.Practices.Unity;

/// <summary>

/// Unity controller activator.

/// </summary>

public class UnityControllerActivator: IHttpControllerActivator

{

/// <summary>

/// Creates an UnityControllerActivator instance.

/// </summary>

/// <param name="activator">Base activator.</param>

public UnityControllerActivator(IHttpControllerActivator activator)

{

if (activator == null)

{

throw new ArgumentNullException("activator");

}

this.activator = activator;

}

/// <summary>

/// Creates a controller wrapper.

/// </summary>

/// <param name="request">A http request.</param>

/// <param name="controllerDescriptor">Controller descriptor.</param>

/// <param name="controllerType">Controller type.</param>

/// <returns>A controller wrapper.</returns>

public IHttpController Create(

HttpRequestMessage request,

HttpControllerDescriptor controllerDescriptor,

Type controllerType)

{

return new Controller

{

activator = activator,

controllerType = controllerType

};

}

/// <summary>

/// Base controller activator.

/// </summary>

private readonly IHttpControllerActivator activator;

/// <summary>

/// A controller wrapper.

/// </summary>

private class Controller: IHttpController, IDisposable

{

/// <summary>

/// Base controller activator.

/// </summary>

public IHttpControllerActivator activator;

/// <summary>

/// Controller type.

/// </summary>

public Type controllerType;

/// <summary>

/// A controller instance.

/// </summary>

public IHttpController controller;

/// <summary>

/// Disposes controller.

/// </summary>

public void Dispose()

{

var disposable = controller as IDisposable;

if (disposable != null)

{

disposable.Dispose();

}

}

/// <summary>

/// Executes an action.

/// </summary>

/// <param name="controllerContext">Controller context.</param>

/// <param name="cancellationToken">Cancellation token.</param>

/// <returns>Response message.</returns>

public Task<HttpResponseMessage> ExecuteAsync(

HttpControllerContext controllerContext,

CancellationToken cancellationToken)

{

if (controller == null)

{

var request = controllerContext.Request;

var container = request.GetDependencyScope().

GetService(typeof(IUnityContainer)) as IUnityContainer;

if (container != null)

{

container.RegisterInstance<HttpControllerContext>(controllerContext);

container.RegisterInstance<HttpRequestMessage>(request);

container.RegisterInstance<CancellationToken>(cancellationToken);

}

controller = activator.Create(

request,

controllerContext.ControllerDescriptor,

controllerType);

}

controllerContext.Controller = controller;

return controller.ExecuteAsync(controllerContext, cancellationToken);

}

}

}

A note on how it works.

IHttpControllerActivator is a controller factory, which Web API uses to create new controller instances by calling IHttpControllerActivator.Create(). Later the controller's IHttpController.ExecuteAsync() is called to run the logic.

UnityControllerActivator replaces the original controller activator with a wrapper that delays the creation (injection) of the real controller until the request objects are registered in the scope.
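Stripped of the Web API and Unity types, the trick is a lazy wrapper around a factory. The JavaScript sketch below only illustrates that idea. createLazyActivator, scope and context are names invented for this example.

```javascript
// Wraps a controller factory so that the real controller is created only on
// the first execute() call, after the request objects have been registered.
function createLazyActivator(createController) {
  return function activate(controllerType) {
    var controller = null;

    return {
      execute: function (scope, context) {
        if (controller === null) {
          // Register per-request objects first, so the factory can inject them.
          scope.context = context;
          controller = createController(controllerType, scope);
        }

        return controller.execute(context);
      }
    };
  };
}

var activate = createLazyActivator(function (type, scope) {
  // The "injected" dependency is read at construction time.
  var injected = scope.context;

  return {
    execute: function () { return type + ":" + injected.requestId; }
  };
});

var wrapper = activate("MyController");

console.log(wrapper.execute({}, { requestId: 42 })); // MyController:42
```

Had the factory been called inside activate(), scope.context would not have been set yet, which is exactly the situation the wrapper avoids.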

To register this class one needs to update the code in UnityWebApiActivator.cs (a file added with the Unity.AspNet.WebApi NuGet package):

public static class UnityWebApiActivator

{

/// <summary>Integrates Unity when the application starts.</summary>

public static void Start()

{

var config = GlobalConfiguration.Configuration;

var container = UnityConfig.GetConfiguredContainer();

container.RegisterInstance<HttpConfiguration>(config);

container.RegisterInstance<IHttpControllerActivator>(

new UnityControllerActivator(config.Services.GetHttpControllerActivator()));

config.DependencyResolver = new UnityHierarchicalDependencyResolver(container);

}

...

}

With this addition we have simplified the boring problem of passing a CancellationToken all around the code, as a controller (and other classes) just declares a property to inject:

public class MyController: ApiController

{

[Dependency]

public CancellationToken CancellationToken { get; set; }

[Dependency]

public IModelContext Model { get; set; }

public async Task<IEnumerable<Products>> GetProducts(...)

{

...

}

public async Task<IEnumerable<Customer>> GetCustomer(...)

{

...

}

...

}

...

public class ModelContext: IModelContext

{

[Dependency]

public CancellationToken CancellationToken { get; set; }

...

}

And finally, to unit test controllers with Dependency Injection you can use code like this:

using System.Threading;

using System.Threading.Tasks;

using System.Web.Http;

using System.Web.Http.Controllers;

using System.Web.Http.Dependencies;

using System.Net.Http;

using Microsoft.Practices.Unity;

using Microsoft.Practices.Unity.WebApi;

using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]

public class MyControllerTest

{

[ClassInitialize]

public static void Initialize(TestContext context)

{

config = new HttpConfiguration();

Register(config);

}

[ClassCleanup]

public static void Cleanup()

{

config.Dispose();

}

[TestMethod]

public async Task GetProducts()

{

var controller = CreateController<MyController>();

//...

}

public static T CreateController<T>(HttpRequestMessage request = null)

where T: ApiController

{

if (request == null)

{

request = new HttpRequestMessage();

}

request.SetConfiguration(config);

var controllerContext = new HttpControllerContext()

{

Configuration = config,

Request = request

};

var scope = request.GetDependencyScope();

var container = scope.GetService(typeof(IUnityContainer))

as IUnityContainer;

if (container != null)

{

container.RegisterInstance<HttpControllerContext>(controllerContext);

container.RegisterInstance<HttpRequestMessage>(request);

container.RegisterInstance<CancellationToken>(CancellationToken.None);

}

T controller = scope.GetService(typeof(T)) as T;

controller.Configuration = config;

controller.Request = request;

controller.ControllerContext = controllerContext;

return controller;

}

public static void Register(HttpConfiguration config)

{

config.DependencyResolver = CreateDependencyResolver(config);

}

public static IDependencyResolver CreateDependencyResolver(HttpConfiguration config)

{

var container = new UnityContainer();

container.RegisterInstance<HttpConfiguration>(config);

// TODO: configure Unity container.

return new UnityHierarchicalDependencyResolver(container);

}

public static HttpConfiguration config;

}

P.S. To those who think Dependency Injection is a universal tool, please read the article: Dependency Injection is Evil.

In the article "Error handling in WCF based web applications" we've shown a custom error handler for a RESTful service based on WCF. This time we shall do the same for a Web API 2.1 service.

Web API 2.1 provides an elegant way to implement custom error handlers/loggers; see the following article. Web API permits many error loggers followed by a single error handler for all uncaught exceptions. The default error handler can output an error in both XML and JSON formats, depending on the requested MIME type.

In our projects we use unique error reference IDs. This feature allows an end user to refer to any error that has happened during the application's lifetime and to pass the error ID to technical support for further investigation. Thus, the error details passed to the client side contain an ErrorID field. An error logger generates the ErrorID and passes it to the error handler for serialization.
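The hand-over of the ID from the logger to the handler can be sketched in a few lines of JavaScript. Everything here is illustrative: logError, toClientError and the counter are made-up stand-ins, and the payload field names are only an example of what a client might receive.

```javascript
var nextErrorId = 1; // stand-in for an ID produced by the log storage

// Logger: records the error and attaches the generated ID to it.
function logError(error) {
  try {
    error.errorId = nextErrorId++;
  } catch (e) {
    // a logger should never throw
  }
}

// Handler: builds what the client receives; details are optional.
function toClientError(error, includeDetail) {
  var payload = { Message: "An error has occurred.", ErrorID: error.errorId };

  if (includeDetail) {
    payload.ExceptionMessage = error.message;
    payload.StackTrace = error.stack;
  }

  return payload;
}

var failure = new Error("Disk is full");

logError(failure);
console.log(JSON.stringify(toClientError(failure, false)));
// {"Message":"An error has occurred.","ErrorID":1}
```

The error object itself carries the ID between the two steps, so the logger and the handler do not need to know about each other.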

Let's look at our error handling implementation for a Web API application.

The first part is an implementation of the IExceptionLogger interface. It assigns an ErrorID and logs all errors:

/// Defines a global logger for unhandled exceptions.

public class GlobalExceptionLogger : ExceptionLogger

{

/// Writes log record to the database synchronously.

public override void Log(ExceptionLoggerContext context)

{

try

{

var request = context.Request;

var exception = context.Exception;

var id = LogError(

request.RequestUri.ToString(),

context.RequestContext == null ?

null : context.RequestContext.Principal.Identity.Name,

request.ToString(),

exception.Message,

exception.StackTrace);

// associates retrieved error ID with the current exception

exception.Data["NesterovskyBros:id"] = id;

}

catch

{

// logger shouldn't throw an exception!!!

}

}

// in the real life this method may store all relevant info into a database.

private long LogError(

string address,

string userid,

string request,

string message,

string stackTrace)

{

...

}

}

The second part is the implementation of IExceptionHandler:

/// Defines a global handler for unhandled exceptions.

public class GlobalExceptionHandler : ExceptionHandler

{

/// This core method should implement custom error handling, if any.

/// It determines how an exception will be serialized for client-side processing.

public override void Handle(ExceptionHandlerContext context)

{

var requestContext = context.RequestContext;

var config = requestContext.Configuration;

context.Result = new ErrorResult(

context.Exception,

requestContext == null ? false : requestContext.IncludeErrorDetail,

config.Services.GetContentNegotiator(),

context.Request,

config.Formatters);

}

/// An implementation of IHttpActionResult interface.

private class ErrorResult : ExceptionResult

{

public ErrorResult(

Exception exception,

bool includeErrorDetail,

IContentNegotiator negotiator,

HttpRequestMessage request,

IEnumerable<MediaTypeFormatter> formatters) :

base(exception, includeErrorDetail, negotiator, request, formatters)

{

}

/// Creates an HttpResponseMessage instance asynchronously.

/// This method determines how a HttpResponseMessage content will look like.

public override Task<HttpResponseMessage> ExecuteAsync(CancellationToken cancellationToken)

{

var content = new HttpError(Exception, IncludeErrorDetail);

// define an additional content field with name "ErrorID"

content.Add("ErrorID", Exception.Data["NesterovskyBros:id"] as long?);

var result =

ContentNegotiator.Negotiate(typeof(HttpError), Request, Formatters);

var message = new HttpResponseMessage

{

RequestMessage = Request,

StatusCode = result == null ?

HttpStatusCode.NotAcceptable : HttpStatusCode.InternalServerError

};

if (result != null)

{

try

{

// serializes the HttpError instance either to JSON or to XML

// depend on requested by the client MIME type.

message.Content = new ObjectContent<HttpError>(

content,

result.Formatter,

result.MediaType);

}

catch

{

message.Dispose();

throw;

}

}

return Task.FromResult(message);

}

}

}

The last, but not least, part of this solution is the registration and configuration of the error logger/handler:

/// WebApi configuration.

public static class WebApiConfig

{

public static void Register(HttpConfiguration config)

{

...

// register the exception logger and handler

config.Services.Add(typeof(IExceptionLogger), new GlobalExceptionLogger());

config.Services.Replace(typeof(IExceptionHandler), new GlobalExceptionHandler());

// set error detail policy according with value from Web.config

var customErrors =

(CustomErrorsSection)ConfigurationManager.GetSection("system.web/customErrors");

if (customErrors != null)

{

switch (customErrors.Mode)

{

case CustomErrorsMode.RemoteOnly:

{

config.IncludeErrorDetailPolicy = IncludeErrorDetailPolicy.LocalOnly;

break;

}

case CustomErrorsMode.On:

{

config.IncludeErrorDetailPolicy = IncludeErrorDetailPolicy.Never;

break;

}

case CustomErrorsMode.Off:

{

config.IncludeErrorDetailPolicy = IncludeErrorDetailPolicy.Always;

break;

}

default:

{

config.IncludeErrorDetailPolicy = IncludeErrorDetailPolicy.Default;

break;

}

}

}

}

}

The client-side error handler remains almost untouched. You may find the implementation details in the /Scripts/api/api.js and Scripts/controls/error.js files.

You may download the demo project here.

Feel free to use this solution in your .NET projects.

Although a WCF REST service + JSON is outdated compared to Web API, there are still a lot of solutions (and new ones will probably appear) that use this "old" technology.

One of the crucial points of any web application is an error handler that gracefully handles server-side exceptions and routes them as JSON objects to the client for further processing. There are dozens of approaches on the Internet that solve this issue (e.g. http://blog.manglar.com/how-to-provide-custom-json-exceptions-from-as-wcf-service/), but none of them demonstrates error handling on the client side. We realize that it's impossible to write something general that suits every web application, but we'd like to show a client-side error handler that utilizes JSON and KendoUI.

In our opinion, a successful error handler must display an understandable error message on the one hand, and on the other hand it has to provide technical info for developers in order to investigate the reason for the exception (and to fix it, if needed):

You may download demo project here. It contains three crucial parts:

- A server-side error handler that catches all exceptions and serializes them as JSON objects (see /Code/JsonErrorHandler.cs and /Code/JsonWebHttpBehaviour.cs).

- An error dialog that's based on user-control defined in previous articles (see /scripts/controls/error.js, /scripts/controls/error.resources.js and /scripts/templates/error.tmpl.html).

- A client-side error handler that displays errors in a user-friendly manner (see /scripts/api/api.js, method defaultErrorHandler()).

Of course this is only a draft solution, but it defines a direction for further customizations in your web applications.

Kendo UI Docs contains an article "How To: Load Templates from External Files", where the authors review two ways of dealing with Kendo UI templates.

While using Kendo UI we have found our own answer to the question: where will the Kendo UI templates be defined and maintained?

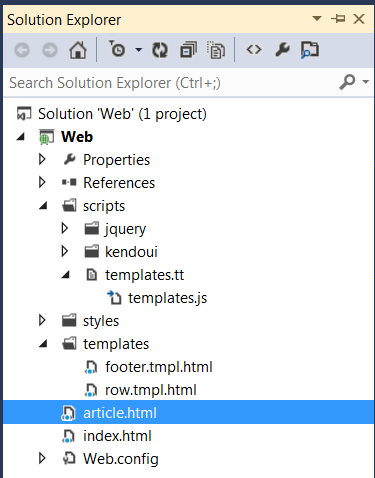

In our .NET project we have decided to keep templates separately, and to store them under the "templates" folder. Those templates are in fact complete documents that include html, head, and stylesheet links. This helps us present those templates in the design view.

In our scripts folder, we have defined a small text transformation template, "templates.tt", which produces the "templates.js" file. This template takes the body contents of each "*.tmpl.html" file from the "templates" folder and builds a string of the form:

document.write('<script id="footer-template" type="text/x-kendo-template">...</script><script id="row-template" type="text/x-kendo-template">...</script>');
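What the transformation has to do can be sketched in JavaScript. This is not the content of "templates.tt": buildTemplatesScript is a hypothetical function, the template bodies are passed in as a map instead of being read from files, and the generated string literal is double-quoted because JSON.stringify is used for escaping.

```javascript
// Hypothetical stand-in for what "templates.tt" produces: turns a map of
// template id -> body markup into the content of "templates.js".
function buildTemplatesScript(templates) {
  var markup = Object.keys(templates).map(function (id) {
    return '<script id="' + id + '" type="text/x-kendo-template">' +
      templates[id] + "</script>";
  }).join("");

  // JSON.stringify escapes quotes and line breaks, so the result is a valid
  // JavaScript string literal.
  return "document.write(" + JSON.stringify(markup) + ");";
}

console.log(buildTemplatesScript({
  "row-template": "<tr><td>#: name #</td></tr>"
}));
```

Escaping is the part that is easy to get wrong by hand, since template bodies normally contain both kinds of quotes and line breaks.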

In our page that uses templates, we include "templates.js":

<!DOCTYPE html>
<html>
<head>
  <script src="scripts/templates.js"></script>
  ...

Thus, we have:

- clean separation of templates and page content;

- automatically generated templates include file.

WebTemplates.zip contains a web project demonstrating our technique. "templates.tt" is the text template transformation used in the project.

See also: Compile KendoUI templates.

If you deal with web applications you have probably already dealt with exporting data to Excel.

There are several options to prepare data for Excel:

- generate CSV;

- generate HTML that Excel understands;

- generate XML in Spreadsheet 2003 format;

- generate data using Open XML SDK or some other 3rd party libraries;

- generate data in XLSX format, according to Open XML specification.

You may find a good article with the pros and cons of each solution here. We, in turn, would like to share our experience in this field. Let's start with the requirements:

- Often we have to export huge data-sets.

- We should be able to format and parametrize the exported data, and to apply different styles to it.

- There are cases when exported data may contain more than one table per sheet, or even more than one sheet.

- Some exported data have to be illustrated with charts.

All these requirements led us to a solution based on XSLT processing of streamed data. The advantage of this solution is that the result is forwarded to a client as soon as XSLT starts to generate output. Such an approach is much more efficient than generating XLSX with the Open XML SDK or any other third party library, since it avoids keeping huge data-sets in memory on the server side.

Another advantage is simple maintenance, as we achieve a clear separation of data and presentation layers. On each request to change formatting or to apply another style to a cell, you just have to modify the xslt file(s) that generate the variable parts of XLSX.

As a result, our clients get XLSX files that conform to the Open XML specification.

We will describe the implementation details of our solution in our next posts.

Earlier we have shown how to build a streaming xml reader from business data, and have reminded about ForwardXPathNavigator, which helps to create a streaming xslt transformation. Now we want to show how to stream content produced with xslt out of a WCF service.

To achieve streaming in WCF one needs:

1. Configure the service to use streaming. A description of how to do this can be found on the internet. See web.config of the sample Streaming.zip for details.

2. Create a service with a method returning Stream:

[ServiceContract(Namespace = "http://www.nesterovsky-bros.com")]

[AspNetCompatibilityRequirements(RequirementsMode = AspNetCompatibilityRequirementsMode.Allowed)]

public class Service

{

[OperationContract]

[WebGet(RequestFormat = WebMessageFormat.Json)]

public Stream GetPeopleHtml(int count, int seed)

{

...

}

}

3. Return a Stream from the xsl transformation.

Unfortunately (we mentioned it already), XslCompiledTransform generates its output into an XmlWriter (or into an output Stream) rather than exposing the result as an XmlReader, while WCF takes an input Stream and passes its content to a client.

We could generate xslt output into a file or a memory Stream and then return that content as an input Stream, but this would defeat the goal of streaming, as the client would start to get data no earlier than the xslt completed its work. What we need instead is a pipe that connects the xslt output Stream to an input Stream returned from WCF.

.NET implements pipe streams, so our task is trivial.

We have defined a utility method that creates an input Stream from a generator

populating an output Stream:

public static Stream GetPipedStream(Action<Stream> generator)

{

var output = new AnonymousPipeServerStream();

var input = new AnonymousPipeClientStream(

output.GetClientHandleAsString());

Task.Factory.StartNew(

() =>

{

using(output)

{

generator(output);

output.WaitForPipeDrain();

}

},

TaskCreationOptions.LongRunning);

return input;

}
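
To illustrate the consumer side, here is a minimal sketch that reads whatever a generator writes. It is not part of Streaming.zip: PipedStreamDemo and ReadGenerated are hypothetical names, and the helper is passed in as a delegate with the same shape as GetPipedStream so that the sketch compiles on its own.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical demo class; 'pipe' stands for a helper shaped like
// GetPipedStream: it takes a generator and returns an input Stream.
public static class PipedStreamDemo
{
    public static string ReadGenerated(
        Func<Action<Stream>, Stream> pipe,
        int lines)
    {
        Stream input = pipe(
            output =>
            {
                // The writer is flushed but deliberately not disposed:
                // disposing it would also close 'output', which the
                // helper still needs after the generator returns.
                var writer = new StreamWriter(output, new UTF8Encoding(false));

                for (var i = 0; i < lines; i++)
                {
                    writer.WriteLine("row " + i);
                }

                writer.Flush();
            });

        // The reader sees the end of the stream once the writing
        // side has been closed by the helper.
        using (input)
        using (var reader = new StreamReader(input, Encoding.UTF8))
        {
            return reader.ReadToEnd();
        }
    }
}
```

With the utility method above, and assuming it lives in a static class named Extensions as the service code suggests, a call would look like PipedStreamDemo.ReadGenerated(Extensions.GetPipedStream, 3).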

We wrapped xsl transformation as such a generator:

[OperationContract]

[WebGet(RequestFormat = WebMessageFormat.Json)]

public Stream GetPeopleHtml(int count, int seed)

{

var context = WebOperationContext.Current;

context.OutgoingResponse.ContentType = "text/html";

context.OutgoingResponse.Headers["Content-Disposition"] =

"attachment;filename=reports.html";

var cache = HttpRuntime.Cache;

var path = HttpContext.Current.Server.MapPath("~/People.xslt");

var transform = cache[path] as XslCompiledTransform;

if (transform == null)

{

transform = new XslCompiledTransform();

transform.Load(path);

cache.Insert(path, transform, new CacheDependency(path));

}

return Extensions.GetPipedStream(

output =>

{

// We have a streamed business data.

var people = Data.CreateRandomData(count, seed, 0, count);

// We want to see it as streamed xml data.

using(var stream =

people.ToXmlStream("people", "http://www.nesterovsky-bros.com"))

using(var reader = XmlReader.Create(stream))

{

// XPath forward navigator is used as an input source.

transform.Transform(

new ForwardXPathNavigator(reader),

new XsltArgumentList(),

output);

}

});

}

This way we have built code that streams data directly from business data to a client in the form of a report. A set of utility functions and classes helped us to overcome .NET's limitations and to build simple code that one can easily support.

The sources can be found at Streaming.zip.

For a long time we developed web applications with ASP.NET and JSF. At present we prefer rich clients and a server with page templates and RESTful web services.

This transition brings technical questions. Consider this one.

Browsers allow session state to be stored entirely on the client, so should we maintain a session on the server?

Since the server is just a set of web services, we may supply all required arguments on each call.

At first glance we can assume that no session is required on the server.

However, looking further we see that we should deal with data validation (security) on the server.

Think about a classic ASP.NET application, where a user can select a value from a dropdown. Either ASP.NET itself or your program (against a list from a session) verifies that the value received is valid for the user. That list of values, and possibly other parameters, constitutes a user profile, which we stored in the session. The user profile played an important role (often indirectly) in the validation of input data.

When the server is just a set of web services, we have to validate all parameters manually. There are two sources that we can rely on: (a) a session, (b) a user principal.

Case (a) is very similar to a classic ASP.NET application, except that there, with EnableEventValidation="true", the runtime did the validation for us most of the time.

Case (b) requires reconstruction of the user profile for a user principal, after which we proceed with validation of parameters.

We may cache the user profile in the session, in which case we reduce (b) to (a); on the other hand, we may cache the user profile in Cache, which is also similar to (a) but which might be lighter than (or at least not heavier than) the solution with the session.

What we see is that the client session does not free us from a server session (or its alternative).
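
Case (b) with a cached profile can be sketched as follows. This is only an illustration under assumptions: UserProfile, ProfileValidator, the notion of an "account" parameter and the loader delegate are all hypothetical, and an in-process dictionary stands in for the session or the ASP.NET Cache.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical user profile: the values a user may legitimately submit.
public sealed class UserProfile
{
    private readonly HashSet<string> allowedAccounts;

    public UserProfile(IEnumerable<string> allowedAccounts)
    {
        this.allowedAccounts =
            new HashSet<string>(allowedAccounts, StringComparer.Ordinal);
    }

    public bool CanUseAccount(string account)
    {
        return (account != null) && allowedAccounts.Contains(account);
    }
}

// Hypothetical validator that a web service method calls before it
// trusts a parameter received from the client.
public sealed class ProfileValidator
{
    // Stand-in for the session or Cache; it never expires entries,
    // which a real cache would have to do.
    private readonly ConcurrentDictionary<string, UserProfile> cache =
        new ConcurrentDictionary<string, UserProfile>();

    private readonly Func<string, UserProfile> loadProfile;

    public ProfileValidator(Func<string, UserProfile> loadProfile)
    {
        this.loadProfile = loadProfile;
    }

    public bool IsAccountValid(string userName, string account)
    {
        // The profile is reconstructed for the principal on first use.
        // Under a race the loader may run more than once for a user.
        var profile = cache.GetOrAdd(userName, loadProfile);

        return profile.CanUseAccount(account);
    }
}
```

The point of the sketch is that the check depends only on the user principal and on server-side data, never on a list that the client sends back.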

It so happened that we have never worked with jQuery, although we were aware of it.

In early 2000 we developed a web application that contained rich javascript APIs, including UI components. Later we worked actively with ASP.NET, and after that with JSF.

At present, looking at jQuery more closely, we regret that we did not start using it earlier.

Separation of business logic and presentation is remarkable when one uses JSON web services. In fact the server part can be seen as a set of web services representing the business logic, and a set of resources: html, styles, scripts, and others. Neither ASP.NET nor JSF comes close to such a consistent separation.

The only trouble, in our opinion, is that jQuery has no standard data binding: a way to bind JSON data to (and from) html controls. The technique that will probably be standardized is called jQuery Templates or JsViews.

Unfortunately, after reading about this binding API, and being in love with Xslt and XQuery, we just want to cry. We don't know what would be the best solution for the task, but what we see looks uncomfortable to us.

AjaxControlToolkit has methods to access ViewState:

protected V GetPropertyValue<V>(string propertyName, V nullValue)

{

if (this.ViewState[propertyName] == null)

{

return nullValue;

}

return (V) this.ViewState[propertyName];

}

protected void SetPropertyValue<V>(string propertyName, V value)

{

this.ViewState[propertyName] = value;

}

...

public bool EnabledOnClient

{

get { return base.GetPropertyValue("EnabledOnClient", true); }

set { base.SetPropertyValue("EnabledOnClient", value); }

}

We find that code unnecessarily complex and suboptimal. Our code to access ViewState looks like this:

public bool EnabledOnClient

{

get { return ViewState["EnabledOnClient"] as bool? ?? true; }

set { ViewState["EnabledOnClient"] = value; }

}

For a couple of days already we have been trying to create a UserControl containing a TabContainer. To achieve the goal we have created a page with a ToolkitScriptManager and the user control itself.

Page:

<form runat="server">

<ajax:ToolkitScriptManager ID="ToolkitScriptManager1" runat="server"/>

<uc1:WebUserControl1 ID="WebUserControl11" runat="server" />

</form>

User control:

<%@ Control Language="C#" %>

<%@ Register

Assembly="AjaxControlToolkit"

Namespace="AjaxControlToolkit"

TagPrefix="ajax" %>

<ajax:TabContainer ID="Tab" runat="server" Width="100%">

<ajax:TabPanel runat="server" HeaderText="Tab1" ID="Tab1">

<ContentTemplate>Panel 1</ContentTemplate>

</ajax:TabPanel>

</ajax:TabContainer>

What could be simpler?

But no, there is a problem. At run time the control works perfectly, but in the designer it shows an error instead of a normal design view:

Error Rendering Control - TabContainer1

An unhandled exception has occurred.

Could not find any resources appropriate for the specified culture or the neutral culture. Make sure "AjaxControlToolkit.Properties.Resources.NET4.resources" was correctly embedded or linked into assembly "AjaxControlToolkit" at compile time, or that all the satellite assemblies required are loadable and fully signed.

That's a stupid error, which says nothing about the real cause of the problem. We had to attach a debugger to Visual Studio just to find out what the problem was.

So, the error occurs in the following code of AjaxControlToolkit.ScriptControlBase:

private void EnsureScriptManager()

{

if (this._scriptManager == null)

{

this._scriptManager = ScriptManager.GetCurrent(this.Page);

if (this._scriptManager == null)

{

throw new HttpException(Resources.E_NoScriptManager);

}

}

}

Originally, the problem is due to the fact that ScriptManager is not found, and the code wants to report an HttpException, but the fun is that we receive a different exception, which is related to a missing resource text for the message Resources.E_NoScriptManager.

It turns out that the E_NoScriptManager text is found neither in the primary nor in the resource assemblies.

As for the original problem, it's hard to say why ScriptManager is not available at design time. We, however, observed that a ScriptManager registers itself for the ScriptManager.GetCurrent() method at run time only:

protected internal override void OnInit(EventArgs e)

{

...

if (!base.DesignMode)

{

...

iPage.Items[typeof(ScriptManager)] = this;

...

}

}

So, it's not clear what they (the toolkit's developers) expected to get at design time.

These observations leave us uneasy about the quality of the library.

It does not matter that DataBindExtender looks unusual in ASP.NET. It turns out to be so handy that we no longer consider built-in data binding an option.

After a short trial you understand that people tried very hard and invented many controls and methods, like ObjectDataSource, FormView, Eval(), and Bind(), with an outcome that is very specific and limited.

In contrast, DataBindExtender:

- performs two-way or one-way data binding of any business data property to any control property;

- converts a value before it's passed to the control, or into the business data;

- validates the value.

See an example:

<asp:TextBox id="Field8" EnableViewState="false" runat="server"></asp:TextBox>

<bphx:DataBindExtender runat='server'

EnableViewState='false'

TargetControlID='Field8'

ControlProperty='Text'

DataSource='<%# Import.ClearingMemberFirm %>'

DataMember='Id'

Converter='<%# Converters.AsString("XXXXX", false) %>'

Validator='<%# (extender, value) => Functions.CheckID(value as string) %>'/>

Here, besides a regular two-way data binding of the property Import.ClearingMemberFirm.Id to the property Field8.Text, we format (and parse) the value with Converters.AsString("XXXXX", false), and finally validate the input value with the lambda function (extender, value) => Functions.CheckID(value as string).

DataBindExtender also works well in template controls like asp:Repeater, asp:GridView, and so on. Having your business data available, you may reduce the size of the ViewState with EnableViewState='false'. This way DataBindExtender brings page development closer to the pattern called MVC.

Recently, we have found that it's also useful to have a way to run javascript during the page load (e.g. when you want to attach some client side event, or register a component). DataBindExtender provides this with the OnClientInit property, which is javascript to run on the client, where this refers to a DOM element:

... OnClientInit='$addHandler(this, "change", function() { handleEvent(event, "Field8"); } );'/>

This allows us to attach an onchange javascript event handler to the asp:TextBox.

So, in the meantime we're very satisfied with what we can achieve with DataBindExtender. It's more than JSF allows, and much stronger and neater than what ASP.NET has provided.

The sources can be found at DataBindExtender.cs.

Recently we raised a question about serialization of ASPX output in xslt.

The question went like this:

What's the recommended way of ASPX page generation?

E.g.:

------------------------

<%@ Page AutoEventWireup="true"

CodeBehind="CurMainMenuP.aspx.cs"

EnableSessionState="True"

Inherits="Currency.CurMainMenuP"

Language="C#"

MaintainScrollPositionOnPostback="True"

MasterPageFile="Screen.Master" %>

<asp:Content ID="Content1" runat="server" ContentPlaceHolderID="Title">CUR_MAIN_MENU_P</asp:Content>

<asp:Content ID="Content2" runat="server" ContentPlaceHolderID="Content">

<span id="id1222146581" runat="server"

class="inputField system UpperCase" enableviewstate="false">

<%# Dialog.Global.TranCode %>

</span>

...

------------------------

Notice the aspx page directives, data binding expressions, and prefixed tag names without namespace declarations.

There was a whole range of expected answers. We, however, were looking to see whether somebody had already dealt with the task and had a ready solution at hand.

In general it seems that the xslt community is very angry about ASPX: both the format and the technology. Well, put this aside.

The task of producing ASPX, which is almost xml, is not solvable when you stay with a pure xml serializer. Xslt's xsl:character-map does not work at all. In fact it looks like a childish attempt to address the problem, as it does not support character escapes but only grabs characters and substitutes them with strings.

We have decided to create an ASPX serializer API that produces the required output text. This way you use <xsl:output method="text"/> to generate ASPX pages.

With this goal in mind we have defined a little xml schema to describe ASPX irregularities in xml form. These are:

<xs:element name="declared-prefix"> - to describe known prefixes, which should not be declared;

<xs:element name="directive"> - to describe directives like <%@ Page %>;

<xs:element name="content"> - a transparent content wrapper;

<xs:element name="entity"> - to issue xml entity;

<xs:element name="expression"> - to describe aspx expression like <%# Eval("A") %>;

<xs:element name="attribute"> - to describe an attribute of the parent element.

This approach greatly simplified the ASPX generation process for us.

The API includes:

In previous posts we were crying about problems with JSF to ASP.NET migration. Let's point to another one.

Consider that you have an input field, whose value should be validated:

<input type="text" runat="server" ID="id1222146409" maxlength="4"/>

<bphx:DataBindExtender runat="server"

TargetControlID="id1222146409" ControlProperty="Value"

DataSource="<%# Import.AaControlAttributes %>"

DataMember="UserEnteredTrancode"/>

Here we have an input control, whose value is bound to the Import.AaControlAttributes.UserEnteredTrancode property. What is missing is value validation. Somewhere we have a function that can answer the question whether the value is valid. It should be called like this: Functions.IsTransactionCodeValid(value).

Staying within standard components we can use a custom validator on the page:

<asp:CustomValidator runat="server"

ControlToValidate="id1222146409"

OnServerValidate="ValidateTransaction"

ErrorMessage="Invalid transaction code."/>

and add the following code-behind:

protected void ValidateTransaction(object source, ServerValidateEventArgs args)

{

args.IsValid = Functions.IsTransactionCodeValid(args.Value);

}

This approach works, however it pollutes the code-behind with many very similar methods. The problem is that the validation rules in most cases are not a property of the page but of the data model. That's why page validation methods just forward the check to somewhere else.

While thinking about how to simplify the code we came up with a more concise way to express validators, namely using lambda functions. To that end we have introduced a Validator property of type ValueValidator on DataBindExtender, where:

/// <summary>A delegate to validate values.</summary>

/// <param name="extender">An extender instance.</param>

/// <param name="value">A value to validate.</param>

/// <returns>true for valid value, and false otherwise.</returns>

public delegate bool ValueValidator(DataBindExtender extender, object value);

/// <summary>An optional data member validator.</summary>

public virtual ValueValidator Validator { get; set; }

With this new property the page markup looks like this:

<input type="text" runat="server" ID="id1222146409" maxlength="4"/>

<bphx:DataBindExtender runat="server"

TargetControlID="id1222146409" ControlProperty="Value"

DataSource="<%# Import.AaControlAttributes %>"

DataMember="UserEnteredTrancode"

Validator='<%# (extender, value) => Functions.IsTransactionCodeValid(value as string) %>'

ErrorMessage="Invalid transaction code."/>

This is almost like an event handler, however it allowed us to call data model validation logic without unnecessary code-behind.

The updated DataBindExtender can be found at DataBindExtender.cs.

Being well behind on the latest news and traps of ASP.NET, we readily fall into every pitfall.

This time it's a script injection during data binding.

In JSF there is a component to output data called h:outputText. Its use is like this:

<span jsfc="h:outputText" value="#{myBean.myProperty}"/>

The output is a span element with the data bound value embedded into its content. The natural alternative in ASP.NET seems to be an asp:Label control:

<asp:Label runat="server" Text='<%# Eval("MyProperty") %>'/>

This almost works, except that h:outputText escapes data (you may override this by specifying the attribute escape="false"), while asp:Label never escapes the data.

This looks like a very serious omission in ASP.NET (in fact very close to a security hole). What are the chances that when you're creating a new page that uses data binding, you will not forget to fix the code that a wizard created for you and to change it to:

<asp:Label runat="server" Text='<%# Server.HtmlEncode(Convert.ToString(Eval("MyProperty"))) %>'/>

Eh? Think what will happen if MyProperty returns a text that looks like a script (e.g.: <script>alert(1)</script>), while you just wanted to output a label.
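
To make the effect of escaping concrete, here is a small sketch outside of any page. It uses System.Net.WebUtility.HtmlEncode from the base class library rather than the page's Server object, so that it runs on its own; EscapeDemo and ToSafeHtml are hypothetical names.

```csharp
using System;
using System.Net;

// Hypothetical helper that shows what escaping does to markup-like text.
public static class EscapeDemo
{
    // Encodes text so that a browser displays it literally instead of
    // interpreting it as markup. A null value is treated as empty text.
    public static string ToSafeHtml(string value)
    {
        return WebUtility.HtmlEncode(value ?? "");
    }
}
```

EscapeDemo.ToSafeHtml("<script>alert(1)</script>") returns &lt;script&gt;alert(1)&lt;/script&gt;, which a browser shows as plain text rather than running as a script.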

To address the issue we've also introduced an Escape property into DataBindExtender. So at present we have code like this:

<asp:Label runat="server" ID="MyLabel"/>

<bphx:DataBindExtender runat="server" TargetControlID="MyLabel"

ControlProperty="Text" ReadOnly="true" Escape="true"

DataSource="<%# MyBean %>" DataMember="MyProperty"/>

See also: A DataBindExtender, Experience of JSF to ASP.NET migration

After struggling with ASP.NET data binding we found no other way but to introduce our little extender control to address the issue.

We were trying to be minimalistic: to introduce two-way data binding and to support data conversion. The extender control (called DataBindExtender) has the following page syntax:

<asp:TextBox id="TextBox1" runat="server"></asp:TextBox>

<cc1:DataBindExtender runat="server"

DataSource="<%# Data %>"

DataMember="ID"

TargetControlID="TextBox1"

ControlProperty="Text" />

Two-way data binding is provided by the DataSource object (notice the data binding over this property) and the DataMember property on the one side, and by TargetControlID and ControlProperty on the other side. DataBindExtender has a Converter property of type TypeConverter to support custom converters.

DataBindExtender is based on the AjaxControlToolkit.ExtenderControlBase class and implements System.Web.UI.IValidator. ExtenderControlBase makes implementation of extenders extremely easy, while IValidator plugs naturally into page validation (Validate method, Validators collection, ValidationSummary control).

The good point about extenders is that they are not visible in the designer, while they expose their properties in the extended control itself. The disadvantage is that an extender requires Ajax Control Toolkit, and also a ScriptManager component on the page.

To simplify its use, DataBindExtender gets data from the control and puts the value into the data source in the Validate method, and puts data into the control in the OnPreRender method; thus no specific action is required to perform data binding.
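
The two directions of such a binding can be sketched with plain property descriptors. This is not the code of DataBindExtender.cs, only a minimal illustration under assumptions: PropertyBinder, Customer and TextField are hypothetical names, and the binding works on arbitrary objects rather than on ASP.NET controls.

```csharp
using System;
using System.ComponentModel;

// Hypothetical business object and control stand-in for the sketch.
public class Customer { public int ID { get; set; } }
public class TextField { public string Text { get; set; } }

public static class PropertyBinder
{
    private static PropertyDescriptor Find(object component, string name)
    {
        var property = TypeDescriptor.GetProperties(component)[name];

        if (property == null)
        {
            throw new ArgumentException(
                "Property '" + name + "' is not found.");
        }

        return property;
    }

    // Data source to control; the text above does this in OnPreRender.
    // Without a converter the two property types must be compatible.
    public static void PushToControl(
        object dataSource, string dataMember,
        object control, string controlProperty,
        TypeConverter converter = null)
    {
        var target = Find(control, controlProperty);
        var value = Find(dataSource, dataMember).GetValue(dataSource);

        if (converter != null)
        {
            value = converter.ConvertTo(value, target.PropertyType);
        }

        target.SetValue(control, value);
    }

    // Control to data source; the text above does this in Validate.
    public static void PullFromControl(
        object dataSource, string dataMember,
        object control, string controlProperty,
        TypeConverter converter = null)
    {
        var value = Find(control, controlProperty).GetValue(control);

        if (converter != null)
        {
            value = converter.ConvertFrom(value);
        }

        Find(dataSource, dataMember).SetValue(dataSource, value);
    }
}
```

For example, with an Int32Converter, PushToControl(customer, "ID", field, "Text", converter) copies the number into the text, and PullFromControl with the same arguments parses the edited text back into ID.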

The source for DataBindExtender is DataBindExtender.cs.

We used to think that ASP.NET is far more powerful than JSF. It might still be true, but not when you are accustomed to JSF and spoiled by its coding practice...

Looking at both technologies from a greater distance, we now realize that they give almost the same level of comfort during development, but they are different. You can feel this after you have worked for some time with one technology and now have to implement a similar solution in the other one. That is where we find ourselves at present.

The funny thing is that we did expect some problems, but in a different place. Indeed, both ASP.NET and JSF are means to define a page layout and to map input and output of business data. While for the presentation (controls, their compositions, masters, styles and so on) you can find more or less equal analogies, the differences in the implementation of data binding are rather a pain.

We have found that data binding in ASP.NET is somewhat awkward. Its Eval and Bind are bearable in simple cases, but almost unusable when your business data is less trivial, or if you have to apply custom data formatting.

In JSF, with its Expression Language, we can perform two-way data binding for rather complex properties like ${data.items[index + 5].property}, create property adapters like ${my:asSomething(data.bean, "property").Value}, or add standard or custom property converters. In contrast, data binding in ASP.NET is limited to a simple property path (no expressions are supported), and custom formatters are not supported either (try to format a number as a telephone number).

Things work well when you're designing an ASP.NET application from scratch, as you naturally avoid pitfalls; however, when you have existing business logic and need to expose it to the web, you have no other way but to write a lot of code-behind just to smooth out the problems that ASP.NET exhibits.

Another solution would be to design something like an extender control that would attach more proper data binding and formatting facilities to control properties. That would allow page definitions to be written in a more declarative way, like what we have now in JSF.

While porting a solution from JSF to ASP.NET we have seen an issue with synchronization of access to data stored in a session from multiple requests.

Consider a case when you store a business object in a session.

Going through the request lifecycle we observe that this business object may be accessed at different stages: data binding, postback event handlers, security filters, and others.

Usually this business object is mutable and does not assume concurrent access. Browsers, however, may easily issue multiple requests to the same session at the same time. In fact, such behaviour is not even an exception, as browsers nowadays often send concurrent requests.

In JSF we use a sync object, which is part of the business object itself; we lock it at the beginning of a request and unlock it at the end. This works perfectly as JSF guarantees that:

- a lock is released after it's acquired (we use a request scope bean with @PostConstruct and @PreDestroy annotations to lock and unlock);

- both lock and unlock take place in the same thread.

ASP.NET, in contrast, tries to be more asynchronous, and allows different stages of a request to take place in different threads. This can be seen indirectly in the documentation, which does not give any commitments in this regard, and by code inspection, where you can see that a request can begin in one thread, and the next stage can be queued for execution in another thread.

In addition, ASP.NET does not guarantee that if BeginRequest has been executed then EndRequest will also run.

The conclusion is that we should not use locks to synchronize access to the same session object, but rather try to invent other means to avoid data races.

Update: MSDN states:

Concurrent Requests and Session State

Access to ASP.NET session state is exclusive per session, which means that if two different users make concurrent requests, access to each separate session is granted concurrently. However, if two concurrent requests are made for the same session (by using the same SessionID value), the first request gets exclusive access to the session information. The second request executes only after the first request is finished. (The second session can also get access if the exclusive lock on the information is freed because the first request exceeds the lock time-out.)

This means that the required synchronization is already built into ASP.NET. That's good.
